The Future of Autonomy Needs Open Standards

January 04, 2023


OpenVX 1.3 is the modern solution the industry needs for automating computer vision and perception tasks. But first, what is computer vision, and why is it important?

As humans, we perceive the world around us remarkably well, simply by allowing light to enter our eyes. Discrete points of light hit different areas of the retina, and signals travel to the brain, where the information is pieced together. This is how a scene begins to form. For a long time, emulating this process in digital systems was considered intractable.

We have been using digital cameras to capture light information for a while. These cameras are now capable of incredibly high resolutions, and yet we have struggled with artificial vision. The reason, of course, is that the camera, just like our eye, is only a small part of vision. It is what happens in the brain that makes the distinct points of light interpretable. This is the stage of the pipeline that computer scientists and researchers in other fields have struggled to replicate in artificial systems.

Within the last ten years, the field of computer vision has benefited immensely from an explosion in machine learning research. Deep learning algorithms like convolutional neural networks (CNNs) have propelled the field forward, with capabilities that only a decade ago were considered impossible for a machine to achieve.

Now, vision algorithms are capable of recognizing objects in photographs. They can distinguish between pedestrians and automobiles in urban environments. They can also recognize troubling signs of disease in pathology images. These achievements have been significant enough to launch a new industry: the Autonomy industry.

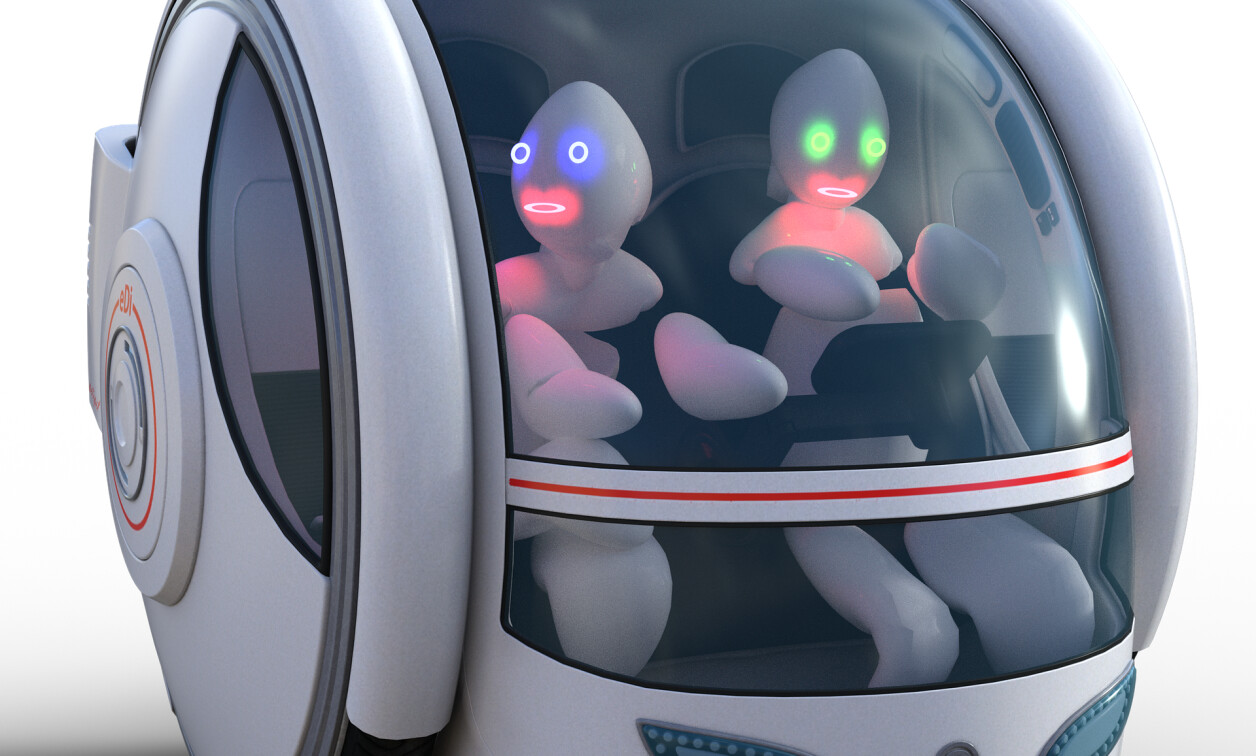

Imagine a future where transportation is handled by autonomous vehicles roving around urban areas, awaiting a summons from a nearby passenger. Consider walking into an operating room to be operated on by a machine that’s been trained to perform a routine surgery, or boarding a pilotless airplane for a quick flight to Europe. These scenarios are still in the future, but they are no longer the stuff of science fiction. In some ways, all these examples exist in our labs today. And all this is only possible because of advancements in computer vision, which enables systems to interpret and react to changes in their environment.

Considering the breakthroughs in computer vision in the past decade, the next hurdle we must overcome is decoupling our software solutions from the hardware on which they execute. The current machine learning revolution, of which computer vision is a beneficiary, was not made possible by some advance in obscure theory. Instead, the leap forward came from the realization that we could use Graphics Processing Units (GPUs) to accelerate the computations involved in running deep neural networks. That is, the solution came from writing software that specifically targeted a hardware accelerator to perform computations faster than anyone thought possible.

A decade of the Age of Autonomy stands before us. It is important that we build the software foundations for the autonomous systems of the next decade so that they are decoupled from the underlying hardware, whilst still being capable of tapping into the required acceleration resources.

That is the benefit that open industry standards like Khronos® OpenVX™ 1.3 can provide. A software stack built on top of an open standard can move freely to new and evolving hardware ecosystems.

OpenVX 1.3 is a modular API based on a computer vision feature set and a neural network inferencing feature set. The API defines a computational graph in which each node is an operation defined as part of one of the feature sets, or a user-provided kernel. The advantage of a computational graph as an execution model is that it gives an implementation ample opportunity to accelerate and optimize the algorithms for the underlying hardware platform. While the API presented to the application is standard across all implementors, each implementor can differentiate its solution by how it manages the computational graph and how well it optimizes that graph for the underlying platform.
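To make the graph model above more concrete, here is a minimal, self-contained sketch in plain C. It is not the OpenVX API: every `toy_` name is hypothetical and invented for illustration, and the "image" is just a four-byte buffer. It only shows the general shape of the idea, which is that the application declares nodes (one built-in style operation and one user-provided kernel), the graph is checked once, and only then executed. A real implementation would presumably use the verification step to plan and optimize execution for its hardware; this toy merely validates the nodes.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical toy types for illustration only; NOT the OpenVX API. */
#define TOY_MAX_NODES 8
#define TOY_PIXELS 4

typedef void (*toy_kernel_fn)(const uint8_t *in, uint8_t *out, size_t n);

typedef struct {
    toy_kernel_fn kernel;   /* operation performed by this node */
    const uint8_t *input;   /* buffer the node reads */
    uint8_t *output;        /* buffer the node writes */
} toy_node;

typedef struct {
    toy_node nodes[TOY_MAX_NODES];
    size_t count;
    int verified;           /* set by toy_verify, cleared when nodes change */
} toy_graph;

/* Stand-in for a built-in operation: bitwise NOT of each pixel. */
static void toy_not(const uint8_t *in, uint8_t *out, size_t n) {
    for (size_t i = 0; i < n; ++i) out[i] = (uint8_t)~in[i];
}

/* Stand-in for a user-provided kernel: binary threshold at 128. */
static void toy_threshold(const uint8_t *in, uint8_t *out, size_t n) {
    for (size_t i = 0; i < n; ++i) out[i] = (in[i] >= 128) ? 255 : 0;
}

/* Append a node; nodes run in the order they were added. */
static int toy_add_node(toy_graph *g, toy_kernel_fn k,
                        const uint8_t *in, uint8_t *out) {
    if (g->count >= TOY_MAX_NODES) return -1;
    g->nodes[g->count++] = (toy_node){k, in, out};
    g->verified = 0;
    return 0;
}

/* Check that every node is fully specified before anything runs. */
static int toy_verify(toy_graph *g) {
    for (size_t i = 0; i < g->count; ++i) {
        const toy_node *nd = &g->nodes[i];
        if (!nd->kernel || !nd->input || !nd->output) return -1;
    }
    g->verified = 1;
    return 0;
}

/* Execute the graph; refuses to run a graph that was not verified. */
static int toy_process(const toy_graph *g, size_t n) {
    if (!g->verified) return -1;
    for (size_t i = 0; i < g->count; ++i)
        g->nodes[i].kernel(g->nodes[i].input, g->nodes[i].output, n);
    return 0;
}

/* Build and run a two-node graph: src -> NOT -> tmp -> threshold -> dst. */
int toy_run_example(const uint8_t src[TOY_PIXELS], uint8_t dst[TOY_PIXELS]) {
    uint8_t tmp[TOY_PIXELS];
    toy_graph g = {0};
    if (toy_add_node(&g, toy_not, src, tmp) != 0) return -1;
    if (toy_add_node(&g, toy_threshold, tmp, dst) != 0) return -1;
    if (toy_verify(&g) != 0) return -1;
    return toy_process(&g, TOY_PIXELS);
}
```

Because the application only describes what should run and in what order, the code that owns `toy_verify` and `toy_process` is free to change how the work is carried out without the application changing. That separation is the property the paragraph above attributes to the graph model.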

What do the feature sets look like?

The computer vision feature set provides a collection of functions that enable an application to perform classic image processing tasks. These functions are provided as high-level APIs that an application can easily call into without worrying about the underlying hardware. It is the OpenVX implementation’s job to consider acceleration under a given hardware platform.

Although image processing and traditional computer vision APIs are still an important part of a robust vision pipeline, state-of-the-art solutions today involve deep learning algorithms. OpenVX 1.3 enables applications to run inference on trained neural network models in two ways:

- An application can build a neural network at runtime by issuing discrete function calls (vxConvolutionLayer, vxActivationLayer, vxFullyConnectedLayer, vxSoftmaxLayer, etc.) and providing the relevant weight and bias parameters to the API.

- The application can import a trained model into the OpenVX computational graph as a single node in the graph. The importing of trained models is done through an import/export extension supporting the Neural Network Exchange Format (NNEF) standard.
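As a rough illustration of what "providing the relevant weight and bias parameters" means in the first method, the plain C sketch below computes a single fully connected layer followed by a ReLU activation. It does not call OpenVX: the `toy_` functions are hypothetical helpers written for this example, the row-major weight layout is an assumption, and the values are made up. It shows only the arithmetic that layers of this kind conventionally perform.

```c
#include <stddef.h>

/* Hypothetical helper, not an OpenVX function.
 * Computes out = W * in + bias, with W stored row-major as n_out rows
 * of n_in weights each (an assumed layout for this sketch). */
void toy_fully_connected(const float *weights, const float *bias,
                         const float *in, size_t n_in,
                         float *out, size_t n_out) {
    for (size_t o = 0; o < n_out; ++o) {
        float acc = bias[o];
        for (size_t i = 0; i < n_in; ++i)
            acc += weights[o * n_in + i] * in[i];
        out[o] = acc;
    }
}

/* Hypothetical helper: ReLU activation applied in place. */
void toy_relu(float *v, size_t n) {
    for (size_t i = 0; i < n; ++i)
        if (v[i] < 0.0f) v[i] = 0.0f;
}

/* Hypothetical helper: index of the largest value (n must be > 0). */
size_t toy_argmax(const float *v, size_t n) {
    size_t best = 0;
    for (size_t i = 1; i < n; ++i)
        if (v[i] > v[best]) best = i;
    return best;
}
```

With weights `{1, 2, -3, 1}`, bias `{0.5, 0}` and input `{1, 1}`, the layer produces `{3.5, -2}`, which ReLU turns into `{3.5, 0}`. In the layer-by-layer method the application supplies such parameters for every layer itself, whereas in the import method they arrive inside the trained model.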

For those looking to build the platforms that will power the decision-making and perception engines of the Autonomy Industry, there are two fundamental problems they need to solve. First is scalability. The platforms they build will need to be resilient to change and must be adaptable to the evolving hardware accelerator landscape. The solution to this problem is decoupling software from hardware using industry standard APIs like OpenVX 1.3.

The second fundamental problem designers must solve is safety and reliability. In this respect too, OpenVX 1.3 provides a solution. The OpenVX 1.3 specification provides a safety-critical profile consisting of a subset of functions aimed at deployment. These are the functions that will run on the edge device and are therefore guaranteed by the implementation provider to execute deterministically.

In a single API, Khronos provides the solution to scalability and reliability for the future of computer vision and the Autonomy industry. That API is OpenVX 1.3.

A listing of the OpenVX 1.3 computer vision APIs:

vxAbsDiff, vxBox3x3, vxChannelExtract, vxConvolve, vxErode3x3, vxGaussianPyramid, vxHistogram, vxLaplacianReconstruct, vxMedian3x3, vxNonLinearFilter, vxOr, vxScaleImage, vxAdd, vxCannyEdgeDetector, vxColorConvert, vxDilate3x3, vxFastCorners, vxHarrisCorners, vxIntegralImage, vxMagnitude, vxMinMaxLoc, vxNot, vxPhase, vxSobel3x3, vxAnd, vxChannelCombine, vxConvertDepth, vxEqualizeHist, vxGaussian3x3, vxHalfScaleGaussian, vxLaplacianPyramid, vxMeanStdDev, vxMultiply, vxOpticalFlowPyrLK, vxRemap, vxSubtract