Embedded Systems: The Big Picture

April 14, 2021


Over my 30+ year career, I've worked in various roles within the hardware value chain. I have been amazed by the extraordinary capability being built out of what is effectively refined sand, and frustrated by the industry's inability to capture relevant value from those hundreds of millions of transistors.

The recent trend and excitement around AI-based systems on a chip (SoCs) has breathed life into this area of the industry. It may, however, prove to be only a short-term break from the long-running downward pressure on component pricing.

In recent years, I’ve focused on the software industry. During this time, I’ve noticed four big themes that may prove worrisome for architects of embedded systems.

Consolidating multiple discrete subsystems on a single multicore processor

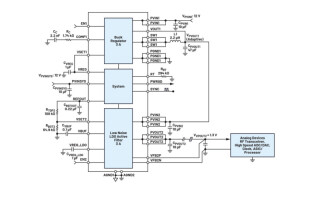

The incredible hardware innovations mentioned above, combined with extraordinary process technology, are delivering impressive levels of multicore processing horsepower, memory, and IO connectivity in “cheap as chips” (pun intended!) components. This lets system architects consolidate what historically were multiple discrete subsystems onto a single multicore processor, potentially saving power, cost, and electronics footprint. Putting all the eggs in one basket, though, requires architects to ensure that these systems are resilient. Hypervisors are used to allocate resources to each application, but care must be taken to partition the system so that accidental or malicious failures cannot cause catastrophic system issues. There cannot be a single point of failure, which means that no single operating system can be responsible for managing all system resources.
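One way to make the "no shared point of failure" rule concrete is to check a static partition layout before it is handed to the hypervisor. The sketch below is a minimal illustration, assuming each partition gets a dedicated set of cores and one contiguous memory window; the `Partition` type, `find_conflicts` function, and the example addresses are hypothetical and do not correspond to any particular hypervisor's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """A hypothetical static partition: dedicated cores plus one memory window."""
    name: str
    cores: frozenset[int]
    mem_base: int  # start address of the partition's memory region
    mem_size: int  # size of the region in bytes


def find_conflicts(partitions):
    """Return (a, b, reason) tuples for every pair of partitions that share
    a core or whose memory regions overlap."""
    conflicts = []
    for i, a in enumerate(partitions):
        for b in partitions[i + 1:]:
            shared = a.cores & b.cores
            if shared:
                conflicts.append((a.name, b.name, f"shared cores {sorted(shared)}"))
            a_end = a.mem_base + a.mem_size
            b_end = b.mem_base + b.mem_size
            # Half-open intervals [base, end) overlap if each starts before the other ends.
            if a.mem_base < b_end and b.mem_base < a_end:
                conflicts.append((a.name, b.name, "overlapping memory"))
    return conflicts


layout = [
    Partition("linux", frozenset({0, 1}), 0x8000_0000, 0x4000_0000),
    Partition("rtos", frozenset({2}), 0xC000_0000, 0x0100_0000),
    Partition("security", frozenset({3}), 0xC100_0000, 0x0010_0000),
]
print(find_conflicts(layout))  # -> []
```

A real layout also has to account for shared caches, interrupt routing, and DMA-capable IO devices, which a simple core and memory check like this does not capture.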

Implementation of functionality into software instead of hardware

Shifting functionality from hardware into software gives the system architect more flexibility to track emerging standards through software updates. Some of the newer networking infrastructure components are effectively general-purpose servers. We are increasingly seeing functionality such as programmable logic controllers (PLCs) in the industrial segment shifting to software implementations, and I believe we can expect industries like robotics and automotive to shift to software architectures over time. This shift requires the system architect to account for functionality that needs real-time responses of around 1 millisecond or tighter. Constraints we hear from customers include:

- About 1 ms for PLCs
- Low 100s of microseconds for controlling robotics
- 10s of microseconds for computer numerical control (CNC)

The approach that nearly all of our customers have adopted is a mixed-criticality system, in which time-insensitive tasks run on an operating system like Windows® or Linux, while time-sensitive code runs on a real-time operating system (RTOS) or even bare metal. The RTOS is only part of the story, though. On today’s SoCs, where memory and IO are shared between cores, meeting these deadlines is challenging and frankly requires very tight collaboration between the customer, the chip vendor, and the software ecosystem.
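As a rough illustration of the mixed-criticality split, the sketch below assigns each task to an execution domain based only on its response deadline, using the customer constraints listed above as a guide. The cutoff values (10 ms and 100 µs), the `Domain` enum, and `assign_domain` are hypothetical assumptions chosen for the example, not vendor guidance.

```python
from enum import Enum


class Domain(Enum):
    GPOS = "general-purpose OS (e.g. Linux or Windows)"
    RTOS = "real-time operating system"
    BARE_METAL = "bare metal"


# Illustrative cutoffs in microseconds (assumptions, not recommendations).
GPOS_CUTOFF_US = 10_000  # deadlines looser than 10 ms can tolerate GPOS jitter
RTOS_CUTOFF_US = 100     # deadlines from 100 us up to 10 ms go to the RTOS


def assign_domain(deadline_us: float) -> Domain:
    """Pick an execution domain for a task from its response deadline."""
    if deadline_us <= 0:
        raise ValueError("deadline must be positive")
    if deadline_us > GPOS_CUTOFF_US:
        return Domain.GPOS
    if deadline_us >= RTOS_CUTOFF_US:
        return Domain.RTOS
    return Domain.BARE_METAL


tasks = {
    "HMI dashboard": 100_000,
    "PLC scan cycle": 1_000,
    "robot joint loop": 250,
    "CNC servo loop": 25,
}
for name, deadline in tasks.items():
    print(f"{name}: {assign_domain(deadline).name}")
```

This prints `GPOS` for the dashboard, `RTOS` for the PLC and robot loops, and `BARE_METAL` for the CNC loop. Deadline alone is not sufficient in practice: as noted above, contention for shared memory and IO can break an RTOS task's timing even when it is placed in the right domain.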

Using the operating system to manage security policies and processes

The operating system is the wrong place to manage security policies and processes. One of the great benefits of IoT systems is the ability to connect to other systems. But one of the biggest disadvantages of IoT systems is the ability to connect to other systems. We see many examples of a compromised OS serving as a pathway for cyberattacks that extract valuable data or alter the system’s functionality. Instead, security policies should be implemented in a separate, provably isolated virtual machine, whether that VM runs bare metal code or an RTOS.

Closing the time gap between a system being compromised and the problem being recognized and mitigated

More work must be done to close the time gap between a system being compromised and that incursion being recognized and mitigated. Systems can sometimes be compromised for months before the breach is identified by an enterprise's IT team. The longer that gap, the higher the probability that the attacker can identify valuable resources. The hypervisor layer of the software stack is the right place for identifying and mitigating zero-day threats. In the Mirai attack of late 2016, the infected devices should have been able to recognize that flooding the DNS provider Dyn with traffic, an attack that knocked services like Spotify and Netflix offline, was unusual behavior. Had they recognized this, they could have taken action such as removing themselves from the network or resetting to a known good state.
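The kind of check described for the Mirai case can be sketched as a monitor that sits outside the guest OS and watches its outbound traffic. The example below is a minimal sketch, assuming a hypothetical hook that reports each outbound packet's destination host to the monitor; the `EgressMonitor` class, the host names, and the `on_violation` callback (standing in for "remove from network" or "reset to known good state") are all illustrative.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EgressMonitor:
    """Hypothetical hypervisor-side monitor for one guest's outbound traffic."""
    allowed_hosts: set[str]
    max_packets_per_window: int
    on_violation: Callable[[str], None]  # e.g. isolate from network or reset the guest
    _count: int = 0
    tripped: bool = False

    def observe(self, dest_host: str) -> None:
        """Record one outbound packet and trigger mitigation on the first violation."""
        if self.tripped:
            return
        self._count += 1
        if dest_host not in self.allowed_hosts:
            self._trip(f"unexpected destination {dest_host}")
        elif self._count > self.max_packets_per_window:
            self._trip(f"rate {self._count} exceeds {self.max_packets_per_window} per window")

    def end_window(self) -> None:
        """Reset the per-window packet count; intended to be called on a fixed timer."""
        self._count = 0

    def _trip(self, reason: str) -> None:
        self.tripped = True
        self.on_violation(reason)


actions = []
monitor = EgressMonitor({"updates.example.com"}, 100, actions.append)
monitor.observe("updates.example.com")
monitor.observe("dns-target.example.net")
print(actions)  # -> ['unexpected destination dns-target.example.net']
```

An allowlist works well for single-purpose embedded devices whose legitimate destinations are known in advance; general-purpose systems usually need the behavioral baseline approach discussed next.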

This becomes even more critical in light of recent breaches of critical infrastructure, such as water treatment plants, and demonstrations of semi-autonomous vehicles being hijacked. This is one of the areas where machine learning has a strong role to play: the technology can be used to build up knowledge of what “normal” system behavior consists of (specific memory accesses, CPU utilization levels, and a profile of IO behavior) and then flag the anomaly and secure system resources when errant behavior occurs.
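The "learn normal, flag deviations" idea can be illustrated with a deliberately simple statistical baseline in place of a trained model. The sketch below records per-metric means and standard deviations during operation assumed to be known-good, then flags any metric whose z-score exceeds a limit. The metric names, the `build_baseline` and `anomalies` functions, and the default limit of 4 standard deviations are hypothetical choices for illustration.

```python
import statistics

# Hypothetical metrics sampled per time window by the monitoring layer.
METRICS = ("cpu_util", "mem_accesses_per_ms", "io_bytes_per_ms")


def build_baseline(samples):
    """Build {metric: (mean, stdev)} from samples recorded during known-good
    operation. Each sample maps metric name -> value; needs at least 2 samples."""
    baseline = {}
    for m in METRICS:
        values = [s[m] for s in samples]
        baseline[m] = (statistics.fmean(values), statistics.stdev(values))
    return baseline


def anomalies(baseline, sample, z_limit=4.0):
    """Return metrics whose value lies more than z_limit standard deviations
    from the baseline mean."""
    flagged = []
    for m, (mean, sd) in baseline.items():
        if sd == 0:
            # A metric that never varied is anomalous if it changes at all.
            if sample[m] != mean:
                flagged.append(m)
        elif abs(sample[m] - mean) / sd > z_limit:
            flagged.append(m)
    return flagged


normal = [
    {"cpu_util": 0.30, "mem_accesses_per_ms": 1200, "io_bytes_per_ms": 50},
    {"cpu_util": 0.32, "mem_accesses_per_ms": 1180, "io_bytes_per_ms": 55},
    {"cpu_util": 0.29, "mem_accesses_per_ms": 1220, "io_bytes_per_ms": 48},
    {"cpu_util": 0.31, "mem_accesses_per_ms": 1210, "io_bytes_per_ms": 52},
]
base = build_baseline(normal)
print(anomalies(base, {"cpu_util": 0.31, "mem_accesses_per_ms": 1205, "io_bytes_per_ms": 5000}))
# -> ['io_bytes_per_ms']
```

Per-metric z-scores miss attacks that only show up in combinations of metrics or over time, which is where richer machine learning models earn their place; the returned list could feed the same isolate-or-reset action as the egress monitor above.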

It is perhaps no surprise that despite my shift into the software part of the technology industry, the largest proportion of my “marketing” work week is still spent engaging with a broad set of hardware partners: IP providers, SoC creators, and board-level manufacturers!