Enabling a depth camera with Qualcomm's Snapdragon 820

March 29, 2017


Depth cameras are essential in robotics and are now available in many shapes and frameworks. To find an object or a path in the real world, a robot needs a sense of its surrounding environment. Most depth cameras use two cameras mounted a fixed distance apart and perform heavy processing to reconstruct a 3D scene from the two captured 2D video streams. In many applications, the original captured video must also be passed to other modules for other purposes, and most existing depth camera modules do not provide this. In this article, we introduce Qualcomm’s Snapdragon 820 as a solution for a real-time depth camera that keeps the basic features of a dual-camera system.

Qualcomm’s Snapdragon 820 is one of the more powerful SoCs in its series. Its application processor is a quad-core, 64-bit ARM-based Kryo CPU that works with several subsystems (such as the GPU, DSP, and RPM). The Hexagon DSP is a very long instruction word (VLIW) processor with multiple hardware threads, instruction packets, L1 and L2 caches, and, like the Application Processor Qualcomm (APQ), access to peripherals and double data rate (DDR) memory. The Snapdragon 820 uses version 6 of the Hexagon architecture, which adds a Hexagon Vector eXtension (HVX) coprocessor that operates on 1024-bit vectors.

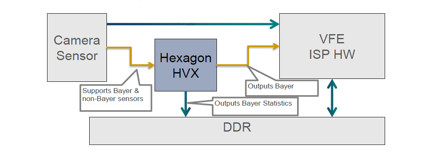

HVX is well suited to high-resolution image processing. Another feature of the Snapdragon 820 is the ability to add custom modules to the image signal processing (ISP) pipeline (Figure 1).

[Figure 1 | HVX module in ISP]

A custom module in the ISP pipeline lets the user process images before they are passed to the other modules in the ISP. The module accepts Bayer or non-Bayer input and produces Bayer output. It has direct access to DDR, so the processing results can be provided to the high-level operating system (HLOS) in real time.
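Because the custom module sees raw Bayer data, a common cheap preprocessing step before edge detection is to reduce the mosaic to a single gray channel. The sketch below is illustrative only: it assumes an RGGB layout and simply averages each 2×2 quad into one half-resolution gray pixel. The function name `bayer_rggb_to_gray` and the equal-weight average are assumptions, not details of the Snapdragon 820 ISP.

```python
def bayer_rggb_to_gray(raw):
    """Collapse an RGGB Bayer mosaic (list of rows of ints) into a
    half-resolution grayscale image by averaging each 2x2 quad.

    Hypothetical helper: assumes an RGGB layout with even width and height.
    """
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("Bayer frame must have even width and height")
    gray = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            r = raw[y][x]           # red sample
            g1 = raw[y][x + 1]      # green sample on the red row
            g2 = raw[y + 1][x]      # green sample on the blue row
            b = raw[y + 1][x + 1]   # blue sample
            # Equal-weight average of the four samples (integer division).
            row.append((r + g1 + g2 + b) // 4)
        gray.append(row)
    return gray
```

A weighted luma formula could replace the plain average; the equal weights are chosen here only to keep the example short.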

The Snapdragon 820 has two ISPs, and a custom image processing module can be included in each pipeline. In other words, if you connect two cameras to the 820, you can run separate custom image processing for each camera. If both custom modules use HVX, each module can use 512-bit vectors. Enabling a custom image processing module puts Hexagon in a dedicated mode that assigns two hardware threads to each custom module (Figure 2).
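To make the two vector widths concrete, the short sketch below counts how many pixels fit in one vector and how many vector operations one 1920-pixel row needs. The 8-bit and 16-bit pixel sizes are illustrative assumptions; a real HVX kernel also has to deal with alignment and row padding, which this arithmetic ignores.

```python
import math


def lanes(vector_bits, pixel_bits):
    """Number of pixels that fit in one vector register."""
    return vector_bits // pixel_bits


def vectors_per_row(row_pixels, vector_bits, pixel_bits):
    """Vector operations needed to cover one image row (the last may be partial)."""
    return math.ceil(row_pixels / lanes(vector_bits, pixel_bits))


# 1024 bits: one module using HVX; 512 bits: HVX used by both modules.
for vec_bits in (1024, 512):
    for pix_bits in (8, 16):
        print(f"{vec_bits}-bit vector, {pix_bits}-bit pixels: "
              f"{lanes(vec_bits, pix_bits)} lanes, "
              f"{vectors_per_row(1920, vec_bits, pix_bits)} vectors per 1920-pixel row")
```

Halving the vector width doubles the number of vector operations per row, which is the cost of sharing HVX between the two camera pipelines.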

[Figure 2 | HVX module and HT]

Processing structure

For this work, we used two cameras with the same resolution, aligned in a rigid fixture. This means the overlap between the two camera views is known in advance. We use the processing pipeline shown in Figure 3.
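With a rigid fixture, the overlap can be approximated by a constant horizontal offset measured once during setup. A minimal sketch, assuming side-by-side cameras whose views differ only by a horizontal shift of `offset` pixels (a hypothetical calibration value), crops both frames to the shared region. This is a coarse model: the true shift of a scene point depends on its depth, which is exactly what the disparity computation later recovers.

```python
def crop_overlap(left, right, offset):
    """Crop two same-size frames to their approximately shared field of view.

    Assumes (hypothetically) that left column x + offset shows roughly the
    same scene column as right column x, for a fixed calibration `offset`.
    """
    width = len(left[0])
    if not 0 <= offset < width:
        raise ValueError("offset must be within the frame width")
    left_roi = [row[offset:] for row in left]             # drop left-only columns
    right_roi = [row[:width - offset] for row in right]   # drop right-only columns
    return left_roi, right_roi
```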

[Figure 3 | Processing blocks]

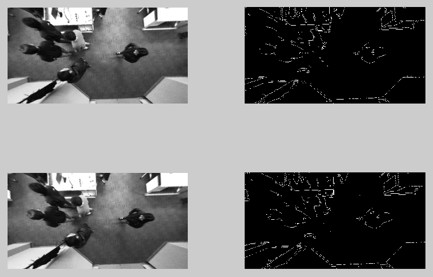

Using a neural network solution (Figure 1) on the HVX modules, we perform edge detection on both camera images at the same time. The edge-detected image from one camera is passed to the other camera's module through a feedback pointer. The two edge images are then processed with the sum of absolute differences (SAD) algorithm, and the result is written to DDR to be shared with the HLOS. As mentioned before, the location and alignment of the cameras are fixed, so they can be used to find the overlapping section of the two captured images. If the cameras were not fixed, an overlap detection module would have to be added to the pipeline.
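The matching step can be illustrated in plain Python. This is a sketch under stated assumptions, not the HVX implementation described above: the neural-network edge detector is replaced by a simple Sobel operator as a stand-in, the images are assumed to be rectified so that matching pixels lie on the same row, and the window size and disparity range are arbitrary. The names `sobel_magnitude` and `sad_disparity` are hypothetical.

```python
def sobel_magnitude(img):
    """Stand-in edge detector: |Gx| + |Gy| Sobel response; borders set to 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = abs(gx) + abs(gy)
    return out


def sad_disparity(left, right, max_disp, half_win=1):
    """Winner-takes-all SAD block matching on rectified images.

    For each pixel in `left`, compare a (2*half_win+1)^2 window with windows
    in `right` shifted left by d = 0..max_disp, and keep the d with the
    smallest sum of absolute differences (ties go to the smaller d).
    Border pixels where the window does not fit are left at 0.
    """
    h, w = len(left), len(left[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(half_win, h - half_win):
        for x in range(half_win, w - half_win):
            best_d, best_cost = 0, None
            for d in range(max_disp + 1):
                if x - d - half_win < 0:
                    break  # candidate window would fall off the right image
                cost = 0
                for dy in range(-half_win, half_win + 1):
                    for dx in range(-half_win, half_win + 1):
                        cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
                if best_cost is None or cost < best_cost:
                    best_d, best_cost = d, cost
            disp[y][x] = best_d
    return disp
```

In this sketch, `sobel_magnitude` would be applied to both frames first and `sad_disparity` run on the two edge maps. The nested loops are written for clarity; a real-time version would vectorize the window sums.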

Experiment

With the two cameras fixed in a static frame, the captured frames have a fixed overlap.

[Figure 4 | View of two cameras with a fixed overlap and the corresponding NN edge detection]

Applying the SAD algorithm to the two corresponding frames and converting the resulting values to grayscale with interpolation gives the result shown in Figure 5.
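The exact gray-level mapping used for the disparity image is not specified. One simple assumed choice is to linearly interpolate each disparity between the minimum and maximum of the frame and scale the result to 0 to 255, so that nearer objects (larger disparity) appear brighter. The function `disparity_to_gray` below is a hypothetical sketch of that mapping.

```python
def disparity_to_gray(disp, d_min=None, d_max=None):
    """Linearly map disparity values to 8-bit gray levels (0..255).

    Larger disparity maps to brighter pixels. If the range is not given,
    it is taken from the data; a map with a single value becomes all zeros.
    """
    values = [v for row in disp for v in row]
    lo = min(values) if d_min is None else d_min
    hi = max(values) if d_max is None else d_max
    span = hi - lo
    if span <= 0:
        return [[0] * len(row) for row in disp]
    gray = []
    for row in disp:
        out_row = []
        for v in row:
            v = min(max(v, lo), hi)   # clamp to the chosen range
            t = (v - lo) / span       # interpolation weight in [0, 1]
            out_row.append(round(t * 255))
        gray.append(out_row)
    return gray
```

Fixing `d_min` and `d_max` to the disparity search range, rather than deriving them per frame, keeps brightness stable from frame to frame in a video.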

[Figure 5 | Disparity result]

With this pipeline, we reach 30 FPS on full HD (1920×1080) video, while the original video remains available to the HLOS without interruption.
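For scale, a quick calculation shows the pixel throughput and per-frame time budget implied by 30 FPS at full HD, assuming one 1920×1080 stream per camera:

```latex
\begin{aligned}
N_{\text{frame}} &= 1920 \times 1080 = 2{,}073{,}600 \ \text{pixels} \\
R_{\text{camera}} &= 30 \times N_{\text{frame}} = 62{,}208{,}000 \ \text{pixels/s} \\
R_{\text{total}} &= 2 \times R_{\text{camera}} = 124{,}416{,}000 \ \text{pixels/s} \\
t_{\text{frame}} &= \tfrac{1}{30}\ \text{s} \approx 33.3\ \text{ms}
\end{aligned}
```

Edge detection, SAD matching, and the write to DDR must therefore all complete within roughly 33 ms per frame pair.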

Intrinsyc