Why GPUs are Great for Training, but NOT for Inferencing

March 23, 2022

In the tech industry, you can hardly have a conversation without someone mentioning inference, artificial intelligence (AI), and machine learning (ML). However, it’s important to note that while all these terms are interconnected, they are also vastly different.

In this article, we’ll explain the fundamental differences and highlight why it is important, particularly in edge and embedded systems, to use tensor processing-based edge AI technology. Tensor processing units (TPUs) offer much more efficient and cost-effective inference performance than graphics processing unit (GPU)-based solutions. We’ll also give some example use cases where you can expect to find edge AI solutions in the future.

The Basics of ML and Inferencing

ML refers to the approach of using representative data to train a model to enable a machine to learn how to perform a task. This process can be highly computationally intensive with each new piece of training data generating trillions of operations. The iterative nature of the training process combined with the very large training data sets needed to achieve high accuracy drives a requirement for extremely high-performance floating-point processing. ML training is best implemented as data center infrastructure that can be amortized across many different customers to justify the high capital and operating expense.

Inferencing is the process of using a trained model to produce a probable match for a new piece of data relative to all the representative data that the model was trained on. Inferencing is designed to provide quick answers, typically within milliseconds. Examples of inferencing include speech recognition, real-time language translation, machine vision, and advertising insertion optimization decisions. While inferencing requires a small fraction of the processing power of training, it is still well beyond the processing provided by traditional central processing unit (CPU)-based systems, especially for computer vision applications. That is why so many companies are turning to tensor-based acceleration solutions, either on the system-on-chip (SoC) as IP or as an in-system accelerator, to achieve the sub-second response times needed at the edge. Taking a minute, or even a few seconds, to process an image in a vision system is not very useful. Industrial vision systems need millisecond-level processing speeds.
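To make the difference in workload concrete, here is a minimal sketch in plain Python. It is not taken from any particular framework: the tiny logistic-regression model, the toy data, and the names `train` and `predict` are all assumptions chosen for illustration. Training loops over the data many times and updates the weights after every sample, while inferencing is a single forward pass through the finished model.

```python
import math

def predict(weights, bias, x):
    """Inference: one forward pass that returns a probability in (0, 1)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, epochs=200, lr=0.5):
    """Training: repeated passes over the data with a gradient update per sample."""
    weights, bias = [0.0] * len(samples[0]), 0.0
    forward_passes = 0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = predict(weights, bias, x)   # forward pass
            forward_passes += 1
            err = p - y                     # gradient of the log loss w.r.t. z
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias, forward_passes

# Made-up toy data: the label is 1 when the first feature dominates.
samples = [[0.9, 0.1], [0.8, 0.3], [0.2, 0.7], [0.1, 0.9]]
labels = [1, 1, 0, 0]

weights, bias, training_passes = train(samples, labels)

# Inference on a new, unseen input needs exactly one forward pass.
probability = predict(weights, bias, [0.85, 0.2])
print(f"training used {training_passes} forward passes plus weight updates")
print(f"inference used 1 forward pass, probability of class 1: {probability:.3f}")
```

Even in this toy case, training performs 800 forward passes (200 epochs over 4 samples), each followed by a weight update, before the model can answer a single query. Real models and data sets scale both sides up by many orders of magnitude, but the asymmetry is the same.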

Separating Training from Inference

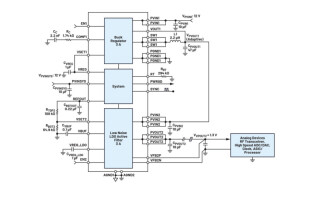

Deploying the same hardware used in training for inference workloads is likely to mean over-provisioning the inference machines with both accelerator and CPU hardware. The GPU solutions that have been developed for ML over the last decade are not necessarily the best solutions for deploying ML inferencing technology in volume. The graphic above compares a TPU accelerator with a GPU accelerator. It shows the TPU accelerator offering much lower power, lower cost, and higher efficiency than the GPU-based AGX solution, while still offering a compelling level of performance for inferencing applications.

Another important consideration when approaching ML training and inferencing solutions is the software environment. Today, many popular tools are in use, such as CUDA for NVIDIA GPUs, ML frameworks such as TensorFlow and PyTorch, optimized cross-platform model libraries such as Keras, and many more. These tool sets are critical to the development and training of ML models, but inferencing applications require a different and much smaller set of software tools.

Inferencing tool sets are focused on running the model on a target platform. Inference tools support the porting of a trained model to the platform. This may include some operator conversions, quantization, and host integration services, but it is a considerably simpler set of functions than that required for model development and training.
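Quantization is one of the porting steps mentioned above. As a rough illustration, the sketch below shows symmetric per-tensor int8 quantization in plain Python. This is only one common scheme, assumed here for simplicity, and the function names and weight values are hypothetical; real inference tool chains typically add calibration data, per-channel scales, and zero points.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]

# Made-up trained weights, stored as floating point after training.
weights = [0.42, -1.27, 0.05, 0.88, -0.31]

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q_weights)   # [42, -127, 5, 88, -31]
print(f"scale = {scale:.4f}, worst-case rounding error = {max_error:.6f}")
```

Each weight now fits in one byte instead of four, and the rounding error stays within half of one quantization step. That trade of a small amount of precision for smaller, cheaper integer arithmetic is the reason quantized models suit low-power inference hardware.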

Inference tools benefit from starting with a standard representation of the model. The Open Neural Network Exchange (ONNX) is a standard format for representing ML models. As its name implies, it is an open standard and is managed as a Linux Foundation project. Technology such as ONNX allows for a decoupling of training and inferencing systems and provides the freedom for developers to choose different optimized platforms for training and for inferencing systems.

Sample Vision Applications

As ML and inferencing processor technology continues to advance and evolve, the applications are endless. Below are just a few of the places you will likely see this technology in the future.

- Edge servers in factories, hospitals, retail stores, financial institutions, and other enterprises. For example, in the industrial space, AI can be used to help manage inventories, detect defects, or even predict defects before they happen. In the retail space, it can enable capabilities such as pose estimation, which uses computer vision technology to detect and analyze human posture. The data gained from this analysis could help brick-and-mortar retailers better understand human behavior and foot traffic in their stores, so they can lay out the store in a way that maximizes retail sales and customer satisfaction.

- High accuracy/quality imaging for applications such as robotics, industrial automation/inspection, medical imaging, scientific imaging, cameras for surveillance and object recognition, photonics, and more. As an example, machine learning approaches have been demonstrated to detect cancer by processing digital X-rays. The process is to develop an ML model designed to process X-ray images, typically using semantic segmentation algorithms trained to detect cancer. In the training phase, images of cancers as identified by expert radiologists are used to train the network to understand what is not cancer, what is cancer, and what different types of cancers look like. The more the ML models are trained, the better they get at maximizing correct diagnoses and minimizing incorrect diagnoses. This means that machine learning depends not only on smart model design but, just as importantly, on a massive quantity (hundreds of thousands to millions) of well-curated data examples in which the cancer has been expertly identified. The inferencing application would then be applied as a second set of eyes to help radiologists review X-rays by highlighting areas of interest for the radiologist to focus on.

- Smart Shopping Carts: Several companies are developing and deploying smart shopping systems that are able to recognize products, not by their UPC bar code, but by the visual appearance of the package itself. This capability allows shoppers to just drop products in the cart or place them on the checkout system without needing to look for the UPC code and scan it with the UPC laser scanner. This technology makes the shopping process more accurate, quicker, and more convenient.

Making the Right Decision

Companies need to evaluate all the solutions available today and make the best selection based on their particular use case. They also can’t just assume that all AI solutions are best implemented on GPU devices, as TPU-based solutions offer much higher processing efficiency and lower silicon utilization, which drive both lower power dissipation and lower cost.