The Evolution of Edge AI and Cloud Computing

April 27, 2021


Before 2019, most IoT systems consisted of ultra-low-power wireless sensor nodes, often battery powered, that provided sensing capabilities.

Their primary purpose was to send telemetry data up to the cloud for big data processing. As the Internet of Things became a buzzword and a market trend, almost every company built proofs of concept (PoCs) this way, and cloud service providers offered polished dashboards that presented the data in attractive graphs to support them. The main goal of a PoC was to convince stakeholders to invest in IoT and to demonstrate a return on investment so that larger projects could be funded.

As this ecosystem scaled up, it became clear that sending too much data back and forth through the cloud could clog the available bandwidth and make it hard to move data in and out of the cloud quickly enough. The resulting latency is at best annoying and, in the extreme, can break applications that need guaranteed throughput or response times.

Although standards such as 5G and Wi-Fi 6E promise major improvements in bandwidth and transfer speeds, the number of IoT nodes communicating with the cloud has exploded. Beyond the sheer number of devices, costs are also rising: early investments in IoT infrastructure and platforms need to be monetized, and as more nodes are added, the infrastructure needs to be both scalable and profitable.

Around 2019, edge computing became a popular solution. Edge computing performs more advanced processing within the local sensor network, which minimizes the amount of data that has to travel through the gateway to the cloud and back. This directly reduces costs and frees up bandwidth for additional nodes. Transferring less data per node can also reduce the number of gateways needed to collect the data and forward it to the cloud.
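To make the data-reduction argument concrete, the following sketch compares streaming every raw sample with sending only periodic summaries computed at the edge. All numbers (a 1 kHz sensor, 2-byte samples, one 16-byte summary per minute) are hypothetical and chosen only for illustration; real savings depend entirely on the application.

```python
# Hypothetical numbers for illustration only; not taken from any specific product.
SECONDS_PER_DAY = 24 * 60 * 60


def raw_bytes_per_day(sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Daily payload if every raw sample is streamed to the cloud."""
    return sample_rate_hz * bytes_per_sample * SECONDS_PER_DAY


def summary_bytes_per_day(summaries_per_hour: int, bytes_per_summary: int) -> int:
    """Daily payload if the edge sends only periodic computed summaries."""
    return summaries_per_hour * 24 * bytes_per_summary


raw = raw_bytes_per_day(sample_rate_hz=1000, bytes_per_sample=2)
edge = summary_bytes_per_day(summaries_per_hour=60, bytes_per_summary=16)
print(f"raw: {raw / 1e6:.1f} MB/day, edge: {edge / 1e3:.1f} kB/day, "
      f"reduction: {raw / edge:.0f}x")
```

Under these assumptions the edge-summarized stream is thousands of times smaller, which is what frees up gateway and backhaul capacity for more nodes.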

Another technology trend that enhances edge computing is artificial intelligence (AI). Early AI services were primarily cloud-based. As innovations were made and algorithms became more efficient, AI has moved rapidly to end nodes, and running it there is becoming standard practice. A familiar example of edge AI is wake-word detection in the Amazon Alexa voice assistant: the trigger word, “Alexa”, is detected locally in the system’s microcontroller (MCU). Once the device has been triggered, the rest of the command is sent over Wi-Fi to the cloud, where the most demanding AI processing takes place. This keeps wake-up latency to a minimum for the best possible user experience.
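The split described above, a cheap local check that gates the expensive cloud work, can be sketched as follows. This is a simplified illustration of the gating pattern, not how any Alexa device is actually implemented: audio frames are modeled as strings, and the `is_wake_word` callable is a hypothetical stand-in for an on-device keyword-spotting model.

```python
from typing import Callable, Iterable, List


def gate_to_cloud(frames: Iterable[str],
                  is_wake_word: Callable[[str], bool]) -> List[str]:
    """Return the frames that would be forwarded to the cloud.

    Every frame is checked locally; nothing is forwarded until the local
    detector fires, after which the following frames (the spoken
    command) are forwarded for cloud processing.
    """
    forwarded: List[str] = []
    triggered = False
    for frame in frames:
        if triggered:
            forwarded.append(frame)  # heavy processing happens in the cloud
        elif is_wake_word(frame):
            triggered = True  # cheap local check; the trigger frame stays local
    return forwarded


# Toy stand-in for an on-device keyword-spotting model.
stream = ["noise", "noise", "alexa", "what's", "the", "weather"]
print(gate_to_cloud(stream, lambda f: f == "alexa"))
```

Only the frames after the trigger leave the device, so the background audio never consumes network bandwidth and the wake-up decision does not wait on a round trip to the cloud.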

Besides addressing bandwidth and cost concerns, edge AI processing brings additional benefits to the application. In predictive maintenance, for example, small sensors can be added to electric motors to measure temperature and vibration. A trained AI model can then predict very effectively when a motor has, or will soon have, a bad bearing or an overload condition. This early warning is critical for servicing the motor before it fails completely, which greatly reduces production-line downtime, offers tremendous cost savings, and minimizes loss of efficiency. As Benjamin Franklin said, “An ounce of prevention is worth a pound of cure”.
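A trained model is beyond the scope of a short example, but the basic idea of flagging abnormal vibration on the node can be sketched with a simple statistical stand-in: compare each new vibration reading against a baseline recorded while the motor was healthy, and flag readings that deviate by more than a chosen number of standard deviations (a z-score test). The readings and the 3-sigma limit below are hypothetical.

```python
import statistics
from typing import List


def flag_anomalies(baseline: List[float], readings: List[float],
                   z_limit: float = 3.0) -> List[bool]:
    """Flag readings more than z_limit standard deviations from the baseline mean.

    The baseline needs at least two values with nonzero spread.
    """
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    return [abs(r - mean) / stdev > z_limit for r in readings]


# Hypothetical per-window vibration RMS values (arbitrary units) from a healthy motor...
healthy = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 0.97]
# ...followed by new windows, the last of which shows rising vibration.
new = [1.01, 0.99, 1.45]
print(flag_anomalies(healthy, new))
```

Only the flag (or an alert) needs to leave the node, rather than the raw vibration waveform. A real deployment would typically combine several features, such as temperature and vibration, in a model trained on labeled failure data.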

As more sensors are added, gateways can also become overwhelmed by telemetry data from the local sensor network. There are two ways to relieve this data and network congestion: add more gateways, or push more edge processing to the end nodes.

Pushing more processing to end nodes, which are typically sensors, is underway and rapidly gaining momentum. End nodes typically consume power in the milliwatt (mW) range when active and spend most of their time asleep, drawing power in the microwatt (µW) range. Their processing capability is also limited by low-power and low-cost requirements. In other words, they are very resource constrained.
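Because an end node alternates between a milliwatt active state and a microwatt sleep state, its average power is the time-weighted mix of the two. The sketch below computes it; the 10 mW active power, 2 µW sleep power, and 1% duty cycle are assumed values, not figures for any particular device.

```python
def average_power_w(active_w: float, sleep_w: float, duty_cycle: float) -> float:
    """Time-weighted average power for a node that is active a fraction of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_w + (1.0 - duty_cycle) * sleep_w


# Assumed figures: 10 mW active, 2 µW asleep, active 1% of the time.
p_avg = average_power_w(active_w=10e-3, sleep_w=2e-6, duty_cycle=0.01)
print(f"average power: {p_avg * 1e6:.1f} µW")
```

Even at a 1% duty cycle, the active term (100 µW) dwarfs the sleep term (about 2 µW), which is why shortening active time, for example by sending less data over the radio, has such a large effect on battery life.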

For example, a typical sensor node may be controlled by an MCU as simple as an 8-bit processor with 64 kB of flash, 8 kB of RAM, and a clock speed of around 20 MHz, or as complex as an Arm Cortex-M4F processor with 2 MB of flash, 512 kB of RAM, and a clock speed of around 200 MHz.

Adding edge processing to resource-constrained end nodes is very challenging and requires innovation and optimization at both the hardware and software levels. Nevertheless, because the end nodes will be in the system anyway, it is economical to give them as much edge processing capability as possible.
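One software-level constraint is simply whether a model's weights fit in flash. The sketch below checks a hypothetical 20,000-parameter model against the two example MCUs, storing weights either as 32-bit floats or as 8-bit integers (quantization is one common optimization, used here only as an illustration). The assumption that half of flash is reserved for firmware, and treating 1 kB as 1024 bytes, are illustrative choices.

```python
# Flash sizes from the two example MCUs; the model size is hypothetical.
MCU_FLASH_BYTES = {
    "8-bit MCU (64 kB flash)": 64 * 1024,
    "Cortex-M4F MCU (2 MB flash)": 2 * 1024 * 1024,
}
BYTES_PER_WEIGHT = {"float32": 4, "int8": 1}


def weights_fit(num_params: int, dtype: str, flash_bytes: int,
                reserved_fraction: float = 0.5) -> bool:
    """True if the weights fit in the flash left after reserving space for firmware."""
    budget = flash_bytes * (1.0 - reserved_fraction)
    return num_params * BYTES_PER_WEIGHT[dtype] <= budget


params = 20_000  # hypothetical small model
for mcu, flash in MCU_FLASH_BYTES.items():
    for dtype in BYTES_PER_WEIGHT:
        print(f"{mcu}, {dtype}: fits={weights_fit(params, dtype, flash)}")
```

Under these assumptions the float32 weights (80,000 bytes) do not fit on the 8-bit part, while the int8 version (20,000 bytes) does. This checks weight storage only; RAM for intermediate results (8 kB versus 512 kB in the examples) is a separate and often tighter limit.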

In summary, end nodes will continue to become smarter, but they must still respect tight cost and power budgets. Edge processing will remain prevalent, as will cloud processing. Being able to assign each function to the right location allows a system to be optimized for its application, giving the best performance at the lowest cost. Distributing hardware and software resources efficiently is the key to balancing competing performance and cost objectives. The right balance minimizes data transfer to the cloud, minimizes the number of gateways, and adds as much capability to the sensor or end nodes as possible.

Example of an Ultra-Low-Power Edge Sensor Node

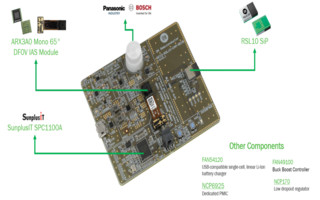

The RSL10 Smart Shot Camera, developed by ON Semiconductor, addresses these various challenges with a design that is ready to be used as-is or easily added to an application. The event-triggered, AI-ready imaging platform uses a number of key components developed by ON Semiconductor and ecosystem partners to give engineering teams an easy way to access the power of AI-enabled object detection and recognition in a low-power format.

The approach is to use the tiny but powerful ARX3A0 CMOS image sensor to capture a single image frame, which is then uploaded to a cloud service for processing. Before the image is sent, an image sensor processor (ISP) from Sunplus Innovation Technology processes it and applies JPEG compression. The compressed image is much faster to transfer over a Bluetooth Low Energy (BLE) link to a gateway or cell phone (a companion app is also available). The ISP is a good example of local (end node) edge processing: because the image is compressed locally, less data is sent over the air on its way to the cloud, and the reduced airtime yields clear savings in power and networking cost.
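The airtime benefit of compressing before transmitting can be estimated with simple arithmetic: transfer time is payload bits divided by effective throughput. The sketch below assumes a 640×480 8-bit grayscale frame, a 10:1 JPEG compression ratio, and an effective BLE application throughput of 500 kbit/s. None of these are specifications of the RSL10 Smart Shot Camera or the ARX3A0.

```python
def airtime_s(payload_bytes: int, throughput_bps: float) -> float:
    """Seconds needed to move a payload at a given effective throughput."""
    return payload_bytes * 8 / throughput_bps


# Assumptions (not product specifications): 640x480 8-bit grayscale frame,
# 10:1 JPEG compression, 500 kbit/s effective BLE application throughput.
raw_bytes = 640 * 480
jpeg_bytes = raw_bytes // 10
BLE_BPS = 500_000

t_raw = airtime_s(raw_bytes, BLE_BPS)
t_jpeg = airtime_s(jpeg_bytes, BLE_BPS)
print(f"raw: {t_raw:.2f} s, JPEG: {t_jpeg:.2f} s")
```

To a first approximation, radio energy scales with airtime, so a tenfold reduction in payload translates into a similar reduction in the energy the node spends transmitting each image.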

The ISP has been specially designed for ultra-low-power operation, consuming just 3.2 mW when active. It can also be configured to perform on-sensor pre-processing that further reduces active power, such as setting a region of interest (ROI). This allows the sensor to remain in a low-power mode until an object or movement is detected in the region of interest.
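The article does not describe how the ISP detects movement in its region of interest, so the following is only a generic illustration of the idea using frame differencing: average the absolute pixel change between two frames inside the ROI, and report motion when that average exceeds a threshold. The frame format, the `roi` tuple layout, and the threshold value are all assumptions for this sketch.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale pixels, 0-255


def roi_motion(prev: Frame, curr: Frame,
               roi: Tuple[int, int, int, int], threshold: float) -> bool:
    """Return True if the mean absolute pixel change inside the ROI exceeds threshold.

    roi is (top, left, bottom, right) with exclusive bottom/right bounds.
    """
    top, left, bottom, right = roi
    total = 0
    count = 0
    for y in range(top, bottom):
        for x in range(left, right):
            total += abs(curr[y][x] - prev[y][x])
            count += 1
    return total / count > threshold


# 4x4 toy frames: only the bottom-right 2x2 block changes.
prev = [[10] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
for y in (2, 3):
    for x in (2, 3):
        curr[y][x] = 200

print(roi_motion(prev, curr, roi=(0, 0, 2, 2), threshold=20))  # change is outside this ROI
print(roi_motion(prev, curr, roi=(2, 2, 4, 4), threshold=20))  # change is inside this ROI
```

Because only the ROI pixels are examined, changes elsewhere in the scene are ignored, and the rest of the system can stay asleep until something happens where it matters.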

Further processing and BLE communication are provided by the fully certified RSL10 System-in-Package (RSL10 SIP), also from ON Semiconductor, which offers industry-leading low-power operation and a short time to market.

(Figure 1. RSL10 Smart Shot Camera contains all of the components required for a rapidly deployable edge processing node.)

As can be seen in Figure 1, the board includes several sensors for triggering activity: a motion sensor, an accelerometer, and an environmental sensor. Once triggered, the board can send an image over BLE to a smartphone, where the companion app can then upload it to a cloud service such as Amazon Rekognition. The cloud service implements deep learning machine vision algorithms; for the RSL10 Smart Shot Camera, it is set up to perform object detection. Once an image is processed, the smartphone app is updated with the objects the algorithm detected, along with a confidence score for each. Cloud-based services like these can be extremely accurate because their models are trained on enormous image datasets.
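As a rough illustration of the cloud step, the sketch below sends one JPEG to Amazon Rekognition's `DetectLabels` operation through a boto3 client and returns the detected labels with their confidence scores. This is not the companion app's code. The client is passed in as a parameter so the function can be exercised without AWS credentials, and the `max_labels` and `min_confidence` defaults are arbitrary choices.

```python
from typing import Any, Dict, List, Tuple


def detect_objects(client: Any, jpeg_bytes: bytes,
                   max_labels: int = 10,
                   min_confidence: float = 70.0) -> List[Tuple[str, float]]:
    """Send one JPEG to Rekognition DetectLabels; return (name, confidence) pairs.

    `client` is expected to be a boto3 Rekognition client, for example
    boto3.client("rekognition"). Results are sorted by confidence, highest first.
    """
    response: Dict[str, Any] = client.detect_labels(
        Image={"Bytes": jpeg_bytes},
        MaxLabels=max_labels,
        MinConfidence=min_confidence,
    )
    labels = [(label["Name"], label["Confidence"]) for label in response["Labels"]]
    return sorted(labels, key=lambda pair: pair[1], reverse=True)


# Example (requires boto3 and AWS credentials; "frame.jpg" is a hypothetical file):
#   import boto3
#   with open("frame.jpg", "rb") as f:
#       for name, conf in detect_objects(boto3.client("rekognition"), f.read()):
#           print(f"{name}: {conf:.1f}%")
```

Keeping the heavy model in the cloud means the node only has to capture, compress, and transmit a single frame per event, which matches the division of labor described above.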

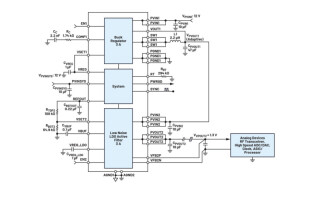

(Figure 2. The RSL10 Smart Shot Camera’s image sensor processor allows images to be sent over Bluetooth Low Energy (BLE) to a smartphone and on to the cloud where computer vision algorithms can be applied for object detection.)

Conclusion

As discussed, the IoT is changing and becoming more optimized to enable massive, cost-effective scaling. New connectivity technologies continue to be developed to address power, bandwidth, and capacity concerns. AI continues to become more capable and more efficient, enabling it to move to the edge and even to end nodes. The IoT is adapting to sustain its continued growth.

The RSL10 Smart Shot Camera from ON Semiconductor is a modern example of how to successfully address the main issues with putting AI at the edge: power, bandwidth, cost, and latency.