Billions of IoT devices in the next decade may be a pipe dream

May 11, 2015

You need to first understand what the IoT is before you can start the process of designing attached devices.

The IoT hype machine has been in full swing for a couple of years, and it’s understandable – the potential presented by a world with billions of smart networked devices is hard to fully comprehend. Improvements in sensor manufacturing, including new materials, nanotechnology, and microelectromechanical systems (MEMS), are presenting new sensing and monitoring capabilities never before envisioned. Advancements in semiconductor manufacturing, with transistors at the 14-nanometer node, are allowing the production of smaller and faster processors and components that are far less energy hungry, and cheaper, too.

One question unanswered at this point is whether the widespread prediction of billions of IoT devices deployed in the next several years is practical, affordable, and, for device makers, profitable. Let’s look at some of the requirements and design decisions you may face when building an IoT device.

Cloud computing has completely upended how we look at data processing and usage. The availability of scalable, expandable IT architectures has prompted the assumption that networks can be (and ought to be) 100 percent reliable, or at least that network faults are acceptable and manageable.

The electronics industry is predicting the deployment of billions of low-cost Internet-enabled devices in the next five to ten years. But the design and production costs of these low-cost devices must be in a range that makes a reasonable business case. We are living in a capitalistic world after all. If our products don’t make a profit, we shouldn’t make them!

There are many mistaken ideas about IoT. Some think that a sensor that sends its data over a network constitutes IoT. This is a portion – a very small portion – of the IoT, but it’s not the whole picture. Others might think that just because a smartphone is used to remotely control a device or to view data it constitutes IoT, and that’s not true. A smartphone is only one component of an IoT system, and not even a mandatory one.

Let’s look at the design of the smallest possible IoT device, a sensor, which monitors one physical variable, such as temperature or pressure. What equipment and software does this small sensing device need? It would need an electronic component that measures the analog variable. And it would need an analog-to-digital converter (ADC), either built into the sensor itself or provided by a separate chip (a feature commonly integrated into the microcontroller).
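As a minimal sketch of that conversion step, the snippet below turns a raw converter reading into a voltage and then into a temperature. The 10-bit resolution, the 3.3 V reference, and the linear sensor (0.5 V at 0 °C, 10 mV per degree) are illustrative assumptions, not values taken from any particular part.

```python
def adc_to_volts(raw: int, bits: int = 10, vref: float = 3.3) -> float:
    """Convert a raw ADC count to volts (assumed 10-bit converter, 3.3 V reference)."""
    max_count = (1 << bits) - 1
    if not 0 <= raw <= max_count:
        raise ValueError("raw count outside converter range")
    return raw * vref / max_count


def volts_to_celsius(volts: float, offset_v: float = 0.5,
                     volts_per_deg: float = 0.010) -> float:
    """Hypothetical linear sensor: offset_v at 0 degrees C, volts_per_deg per degree."""
    return (volts - offset_v) / volts_per_deg
```

On a real device this arithmetic would usually be done in fixed-point C, but the steps are the same.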

The device might be required to pre-process the sensor data before transmitting it. The processor in such a device can be very simple, usually an 8- or 16-bit processor. The software running on this processor will often be written using a bare metal approach. It will be a single-threaded application, a super loop.
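The super-loop structure can be sketched as follows. Python is used here only for readability; real firmware of this kind would typically be written in C. The `read_sensor` and `transmit` functions are hypothetical stand-ins for hardware drivers, and the moving average is just one example of pre-processing.

```python
from collections import deque


def read_sensor(tick: int) -> int:
    """Stand-in for a real ADC read; returns a made-up raw value."""
    return 500 + (tick % 4)


def transmit(value: float, outbox: list) -> None:
    """Stand-in for a radio or serial driver; here it just records the value."""
    outbox.append(value)


def super_loop(iterations: int, window: int = 4) -> list:
    """Single-threaded super loop: sample, pre-process, transmit, repeat."""
    samples = deque(maxlen=window)
    outbox = []
    for tick in range(iterations):   # on real hardware this would loop forever
        samples.append(read_sensor(tick))
        if len(samples) == window:   # pre-process: moving average over the window
            transmit(sum(samples) / window, outbox)
        # a real device would sleep here until the next timer tick
    return outbox
```

Everything happens in one thread, in a fixed order, which is why this style is easy to fit on a small 8- or 16-bit part.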

The performance requirements are usually quite low, so low that such a device could run on a coin battery for years. And the device’s cost would be fairly low, often less than a dollar apiece.

This is exactly what we were looking for – a low-cost, low-power device that we can manufacture by the billions, so we can cover the entire planet! Game over! Actually, the game is far from over. And this is exactly where things start to become interesting. First, the processor’s cost is closely tied to the amount of built-in flash and RAM it includes. The more memory, the larger the chip, and this is true even for chips made at 14 nm. But the design requirements for IoT devices increasingly result in larger quantities of code, and thus require chips with more flash and RAM.

The second issue is development time and cost. New features make IoT devices more complex, and more complexity requires more development time. Even if the popularity of open-source software is making managers (falsely) believe that software is free, a software engineer’s time certainly is not.

Power-management considerations

Battery-operated devices typically make use of some sort of power-management software. This isn’t always a simple thing to implement. Some microcontroller user manuals have more than 400 pages describing all of the chip’s different power modes. To take advantage of all these modes, your application development must be smarter, and device testing becomes more complex, adding costs to your development project.
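To see why the sleep modes matter so much, here is a rough duty-cycle estimate. All the numbers in the usage note (a 220 mAh coin cell, 5 mA while active, 2 µA while asleep, 10 ms of activity every 10 s) are assumptions chosen for illustration, and the calculation ignores battery self-discharge.

```python
def average_current_ma(active_ma: float, sleep_ma: float,
                       active_s: float, period_s: float) -> float:
    """Time-weighted average current for a device that wakes once per period."""
    duty = active_s / period_s
    return active_ma * duty + sleep_ma * (1.0 - duty)


def battery_life_years(capacity_mah: float, avg_ma: float) -> float:
    """Idealized battery life: capacity divided by average current draw."""
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)
```

With the assumed figures, `average_current_ma(5.0, 0.002, 0.01, 10.0)` is about 0.007 mA, and `battery_life_years(220, ...)` comes out at roughly three and a half years. Keep the device awake ten times longer per cycle and the estimate drops to well under a year, which is why the power modes are worth the extra development effort.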

Now that the data in the sensor device is available, we need to transmit it. This is where the Sensor Network (SN) and Wireless Sensor Network (WSN) come into play. With ZigBee and 6LoWPAN, for example, the amount of code required in the device (a node) to run these protocol stacks is about 100 KB to 120 KB, depending on whether the node is an end device or a coordinator/router. An additional 6 KB to 8 KB of RAM for the protocol stack is needed, depending on the buffer strategy used.

This code and RAM space requirement can be met on some of today’s 8-/16-bit processors. Because of the higher demand for flash memory and RAM, the cost of such processors is higher. Data throughput and transfer frequency are generally assumed to be low on a WSN.
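A quick way to check whether a candidate part can hold the stack plus your application is to add up the footprints. The sketch below uses the upper stack figures quoted above (120 KB of code, 8 KB of RAM); the application footprint and the chip sizes in the usage note are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    flash_kb: int
    ram_kb: int


def fits(parts: list, chip_flash_kb: int, chip_ram_kb: int) -> bool:
    """True if the summed flash and RAM footprints fit on the chip."""
    total_flash = sum(p.flash_kb for p in parts)
    total_ram = sum(p.ram_kb for p in parts)
    return total_flash <= chip_flash_kb and total_ram <= chip_ram_kb


WSN_STACK = Footprint(flash_kb=120, ram_kb=8)   # upper figures from the text
APPLICATION = Footprint(flash_kb=16, ram_kb=2)  # hypothetical application size
```

For example, `fits([WSN_STACK, APPLICATION], 128, 8)` is false while `fits([WSN_STACK, APPLICATION], 256, 16)` is true, so in this made-up case the design is pushed up to the next, more expensive memory size.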

Remember, the I in IoT stands for Internet. This means that, at some point in the system, Internet Protocol is involved; maybe not the public Internet (the web), but certainly a private IP network (an Intranet).

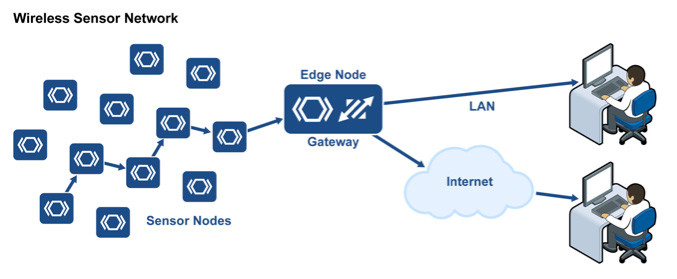

The purpose of the sensor network code is to move the sensor data through the local network. To get the data onto a private IP network (or the Internet), we need an additional device – an edge node or gateway. This device bridges the sensor network to an IP network. It needs an Ethernet controller and Ethernet connector for TCP/IP and the protocol stack, or a Wi-Fi radio with the appropriate protocol stack.

A fully IETF RFC compliant TCP/IP stack requires about 100 KB of code space. A single Ethernet buffer needs 1.5 KB. If performance isn’t an issue, your device can get by with only a few kilobytes for transmit/receive buffers. If better performance is required, then at least 30 KB to 60 KB will be required. And this doesn’t include memory for the protocol stack’s internal data structures.
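To put those buffer figures in perspective, the short sketch below counts how many full-size Ethernet buffers fit in a given RAM budget. It assumes 1 KB = 1024 bytes, so a 1.5 KB buffer is 1536 bytes; the function name is made up for this example.

```python
ETHERNET_BUFFER_BYTES = 1536  # 1.5 KB per buffer, assuming 1 KB = 1024 bytes


def buffers_in(ram_kb: int) -> int:
    """Number of whole Ethernet buffers that fit in ram_kb kilobytes."""
    return (ram_kb * 1024) // ETHERNET_BUFFER_BYTES
```

A 3 KB budget holds only two buffers, while the 30 KB to 60 KB range quoted for better performance corresponds to 20 to 40 buffers.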

The relatively hefty memory demands of an IP-enabled device call for increased hardware requirements. There are two approaches to deal with these requirements:

- Use an external module to handle the Ethernet/Wi-Fi communication

- Use a more powerful (and expensive) 32-bit processor in your device

Either choice has an impact on the power consumption and the manufacturing cost of the device.

Designing with two processors is a good solution, especially when you can put one or both processors in deep sleep mode to save power. But the communication processor must be a 32-bit chip.

Consider that the memory required for a TCP/IP stack can be between 50 KB and 100 KB in code size, and a Wi-Fi stack is the sum of the TCP/IP stack plus the Wi-Fi network management, authentication, and encryption code, which is as large as the TCP/IP stack. This makes a Wi-Fi stack twice the size of a TCP/IP stack. So if decent performance is required, you’ll need a fast processor and a fair amount of RAM.

Running complex protocol stacks such as TCP/IP can be done in a single-threaded and polling fashion, but this isn’t very CPU efficient. And if you have more tasks for the communication processor in addition to running the protocol stack, then a real-time kernel is a better option than a single-threaded super-loop. This way, the software for the communication processor can be scalable, modular, and reliable, and at the same time allow for easier team development, product support, and maintenance.

IPv4/IPv6 protocols

We already know that IPv4 doesn’t provide enough public IP addresses to sustain the growth of the Internet and the number of IoT devices predicted for the next few years. IoT devices must start using IPv6. Although the earliest IoT systems use IPv4 and can continue to do so, IPv6 is mandatory for sustained growth.
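The size of the gap is easy to check with Python’s standard `ipaddress` module. The snippet compares the total IPv4 and IPv6 address spaces; note that the usable public IPv4 space is smaller than the total, because some ranges are reserved. The `enough_addresses` helper is a made-up name for this example.

```python
import ipaddress

# Total number of addresses in each protocol's address space.
IPV4_TOTAL = ipaddress.ip_network("0.0.0.0/0").num_addresses
IPV6_TOTAL = ipaddress.ip_network("::/0").num_addresses


def enough_addresses(devices: int, total: int) -> bool:
    """True if every device could, in principle, get its own address."""
    return devices <= total
```

IPv4 tops out at 2^32, or about 4.3 billion addresses, so even ten billion devices cannot each have a public IPv4 address. IPv6 offers 2^128.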

Google keeps up-to-date statistics about IPv6 usage on the Internet, and they show that adoption is still far from complete. This means that IoT devices need to run both IPv4 and IPv6 for the foreseeable future. Dual protocol stacks increase the code size and RAM requirements for the hardware design. And testing two protocols adds time to the device design and testing phases, which ultimately results in higher costs.

IoT devices touch upon our lives both in industry and our homes. But one aspect of the IoT that hasn’t gotten enough attention is security, both for your equipment and your customer’s data. Much has been written about the need for security and confidentiality in the IoT, but there have been relatively few solutions presented.

Data encryption

The first layer of protection is data encryption, which is vital when data is transmitted to distant servers or mobile devices. Data encryption is a well-understood process. It’s done every time we use a secure connection for online banking or shopping. The same technologies can be applied to IoT devices.

Encryption takes place in the SSL/TLS (Secure Sockets Layer/Transport Layer Security) layer of the TCP/IP stack. Encryption algorithms are sophisticated mathematical functions that take data and encipher it. The code size for the SSL/TLS layer can be around 30 KB to 50 KB, and the required RAM size is even greater. The security is applied per socket. On PCs, laptops, smartphones, and tablets, it’s common to use as much as 100 KB of RAM per socket. On a meticulously designed embedded SSL/TLS stack, it can be reduced to about 45 KB per socket. Another way to reduce the per-socket RAM requirement is to use proprietary cipher protocols both on the device and on the cloud server, assuming that custom protocol negotiation between the client (device) and server is in place.
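Because the cost is per socket, it scales with the number of secure connections a device keeps open. The sketch below uses the per-socket figures quoted above (about 45 KB for a tuned embedded stack, up to 100 KB on a desktop-class one); the function names and the 128 KB RAM budget in the usage note are assumptions.

```python
def tls_ram_kb(sockets: int, per_socket_kb: int = 45) -> int:
    """RAM needed to keep the given number of secure sockets open."""
    return sockets * per_socket_kb


def max_secure_sockets(available_ram_kb: int, per_socket_kb: int = 45) -> int:
    """How many secure sockets fit in the RAM left over for TLS."""
    return available_ram_kb // per_socket_kb
```

With 128 KB set aside for TLS, a tuned embedded stack could hold two secure sockets open, while a stack needing 100 KB per socket could hold only one.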

You can reduce some of the code overhead for SSL/TLS by using hardwired encryption peripherals that are built into the chip. The encryption is performed more efficiently, but the additional cost is moved from software to hardware and requires some custom software work to adapt the SSL/TLS stack to the hardware peripherals.

How do you ensure that the IoT device itself isn’t a vulnerable spot on your network? Techniques for hacking an IoT device can include:

- Gaining unauthorized access

- Reverse engineering a device with the intent to copy, steal or destroy a commercial advantage

- Violating the integrity of the device by injecting code. Such code might not be malevolent in and of itself, but it may illegally enhance a program’s functionality, or otherwise subvert a program so it performs new and unadvertised functions that the owner or user would not approve of

Anti-tampering technology is not only about preventing piracy; it’s even more important in IoT for policy enforcement (that is, ensuring the device always does what it’s supposed to do). There are plenty of anti-tampering protection mechanisms, including encryption wrappers, code obfuscation, guarding, and watermarking/fingerprinting. In addition, there are many hardware techniques.

Software is best protected when several protection techniques are combined. This is where anti-tampering methods such as Trusted Execution Environments (TEE) come into play. And some vendors provide their own proprietary technologies, such as Intel’s TXT (Trusted Execution Technology), AMD’s SVM (Secure Virtual Machine), and ARM’s TrustZone.

Other silicon vendors provide hypervisor-based TEE, or make use of ISO standards such as the Trusted Platform Module (TPM), which is designed as a fixed-function device with a predefined feature set. Unfortunately, all these features demand more transistors and more software, leading to bigger chips and higher software development costs.

Secure boot

Secure boot is the ability for a device to validate and load trusted software. It’s an important element of an anti-tampering feature. With secure boot, the device’s firmware is encrypted. At boot-up, the firmware is decrypted, loaded into flash memory or RAM, and executed. If the firmware decryption or loading step fails, the system rolls back to a previous version of the firmware that’s known to be secure. Of course, to store both the encrypted and decrypted firmware, the system needs more non-volatile memory and additional software and RAM for the decryption step and boot loader.
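The validate-then-fall-back logic can be sketched in a few lines. One important substitution: the text describes encrypted firmware, but this example checks an HMAC-SHA256 authentication tag instead, because that is what Python’s standard library offers. The two-slot layout, the function names, and the shared key are all assumptions made for illustration.

```python
import hashlib
import hmac


def is_trusted(image: bytes, tag: bytes, key: bytes) -> bool:
    """Check the image against its authentication tag (stand-in for decryption)."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


def select_boot_image(candidate: tuple, fallback: tuple, key: bytes) -> bytes:
    """Each slot is an (image, tag) pair. Boot the candidate if it validates,
    otherwise roll back to the previous known-good image."""
    for image, tag in (candidate, fallback):
        if is_trusted(image, tag, key):
            return image
    raise RuntimeError("no trusted firmware image available")
```

Keeping the fallback slot is exactly what drives the extra non-volatile memory: the device has to hold two complete images at once.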

Remote firmware upgrade is an extension of the secure boot feature. This function is often referred to as FOTA (Firmware Over The Air). When a new version of the firmware is available, the device must either receive a push notification from the server, or poll the server itself for a firmware update. Once the device knows that a new firmware update is available, it must download it, store it, and execute steps similar to the ones described above for secure boot.

In addition to the extra non-volatile memory required to store the new firmware, the device must implement the necessary communication stack and physical layers to connect to a network and back-end services. And even more software is required to manage the secure firmware upgrade process.
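The polling variant of this process might look like the sketch below. The manifest format (a dictionary with `version` and `url` keys) and the `fetch_manifest` callable are hypothetical; a real device would fetch the manifest over its network stack and then validate the downloaded image before booting it.

```python
def parse_version(text: str) -> tuple:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def update_available(current: str, advertised: str) -> bool:
    """True if the server advertises a newer version than the one running."""
    return parse_version(advertised) > parse_version(current)


def poll_for_update(current: str, fetch_manifest):
    """Ask the server for its manifest; return the download URL or None."""
    manifest = fetch_manifest()
    if update_available(current, manifest["version"]):
        return manifest["url"]
    return None
```

Comparing parsed tuples rather than raw strings avoids the classic mistake of treating version 1.9.0 as newer than 1.10.0.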

Safety-critical certification

Many industries have imposed some level of safety-critical certification for products. Certification is performed to meet certain international standards, such as IEC 61508 for industrial control software and IEC 62304 for medical device software.

Building a product for safety-critical industries means creating a strict development process where each deliverable can be measured, documented, and traced. The software/firmware may not be larger, but the development cost is definitely higher.

All industries, including consumer products, are now paying attention to memory faults caused by cosmic radiation. This kind of damage, caused by a stray radiation particle, is referred to as a single event upset. This phenomenon is a concern for airplanes, satellites and spacecraft, as high-altitude vehicles suffer a greater exposure to cosmic rays. The silicon ICs used in these products need to be radiation hardened so a single event upset can be prevented.

This phenomenon can also happen on the ground, but because the Earth’s atmosphere is denser near the surface, the probability of such an event is quite low. This is about to change with the sudden flood of IoT devices that are predicted to be deployed over the next decade. With billions of active devices, the probability that at least one of them suffers a single event upset increases.

To protect against this problem, industries are starting to impose IEC 60730, a safety standard for electronic controls in household appliances. Devices that follow this standard must be able to detect a memory fault, and stop the device safely, or otherwise correct the problem. Again, dedicating more transistors and more software to this problem adds more cost to your product’s development.
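One common way to detect this kind of fault is to checksum a memory region periodically and compare the result against a stored reference. The sketch below uses CRC-32 from Python’s standard `zlib` module and simulates a single flipped bit. It illustrates the general idea only and is not a statement of what IEC 60730 specifically requires; the function names are made up.

```python
import zlib


def checksum(memory: bytes) -> int:
    """Reference checksum, computed while the memory is known to be good."""
    return zlib.crc32(memory)


def flip_bit(memory: bytes, byte_index: int, bit: int) -> bytes:
    """Simulate a single event upset by flipping one bit."""
    corrupted = bytearray(memory)
    corrupted[byte_index] ^= 1 << bit
    return bytes(corrupted)


def memory_intact(memory: bytes, reference_crc: int) -> bool:
    """Periodic self-test: recompute the checksum and compare."""
    return zlib.crc32(memory) == reference_crc
```

When `memory_intact` returns false, the device would stop safely or reload the affected region, which is the extra software the standard ends up costing you.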

Device lifecycle management

Lifecycle management is another feature that can increase the complexity of your development process. Your device will need to cooperate with whatever device lifecycle requirements are put in place by the product manufacturer or the system user. To be clear, device lifecycle management includes:

- Registering the device (authorizing its operation in the system)

- Configuring the device (setting operating parameters and product options)

- Automatically reporting problems and failures

- Updating the device’s firmware to address bugs or provide feature enhancement

- Providing data for billing purposes (and other usage statistics)

There are no existing standards for device lifecycle management. Multiple solutions exist, but they are all proprietary. Needless to say, we’re not speaking of lightweight software. Even if it will reduce operating costs, implementing lifecycle management will add development and maintenance costs to any system design.
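Even a toy model shows how much state the device has to carry for the five responsibilities listed above. The class below is a hypothetical sketch, not any vendor’s API; the class name, method names, and states are invented for illustration.

```python
from enum import Enum, auto


class State(Enum):
    UNREGISTERED = auto()
    REGISTERED = auto()
    CONFIGURED = auto()


class ManagedDevice:
    """Hypothetical device-side record of the lifecycle steps."""

    def __init__(self, device_id: str, firmware: str = "1.0.0"):
        self.device_id = device_id
        self.firmware = firmware
        self.state = State.UNREGISTERED
        self.config = {}
        self.faults = []
        self.usage_units = 0

    def register(self) -> None:
        self.state = State.REGISTERED

    def configure(self, **params) -> None:
        if self.state is State.UNREGISTERED:
            raise RuntimeError("device must be registered before configuration")
        self.config.update(params)
        self.state = State.CONFIGURED

    def report_fault(self, message: str) -> None:
        self.faults.append(message)

    def update_firmware(self, version: str) -> None:
        self.firmware = version

    def record_usage(self, units: int = 1) -> None:
        self.usage_units += units
```

A production implementation would add authentication, persistence, and a protocol to talk to the back end, which is where most of the weight comes from.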

In some industries, such as the consumer market, standards are still struggling to emerge. Rather, these industries are suffering from standards fragmentation. Multiple standards are being proposed in the consumer market. For example, Apple is proposing its proprietary HomeKit; Google, with its Nest acquisition, is developing something called Thread; Intel has formed the Open Interconnect Consortium (OIC); and Qualcomm spun off its AllJoyn initiative to become AllSeen.

If you are a manufacturer of consumer products, you may have to decide which proprietary standard you’ll align your business and products with. And there are situations where you may need to use more than one standard. For example, a gateway that must be able to connect to devices from multiple vendors may need to support any or all of these standards.

As you can see, IoT is not a one-size-fits-all technology. It’s more like a box of Legos, with blocks representing local and wireless networks, sensors, energy harvesting, batteries, microcontrollers, Internet protocols, web technologies, databases, analytics, 3G/4G smartphones, tablets, and more. And these pieces can be used in various ways.

To meet the prediction of billions of IoT devices deployed in the next decade, the cost per device must be in a range that will make a profitable business case. Otherwise, it’ll never happen. Yet at the same time, consumers are asking for more rugged, secure, and flexible devices. These additional requirements can’t be an afterthought. IoT is real, but these demanding constraints could slow down its adoption and implementation. The billions of devices will happen, but perhaps it’ll take more than ten years.