Solving Critical Challenges of Autonomous Driving

October 12, 2018

The technology of “autonomous driving” is progressing from specific driver-assistance functions such as adaptive cruise control and collision avoidance, through temporary supervised auto-pilot, to fully self-driving vehicles capable of completing journeys from beginning to end with no human intervention at all.

The five levels of autonomy (figure 1) defined by SAE International (formerly the Society of Automotive Engineers) help to visualize the increasing extent of machine involvement in driving vehicles of today and – more importantly, perhaps – the future.

Moving up through these levels, increasingly accurate situational awareness is clearly required. Tasks such as object recognition, spatial awareness, and positioning are core human skills, and the challenge of replicating them in a machine is only now beginning to be overcome, thanks to the Moore’s Law progression in chip processing capability relative to cost and power consumption.

With the benefit of this progress, the highest level of autonomy, the fully self-driving vehicle, is within reach. Indeed, some high-profile autonomous car projects have already accomplished over 1 million miles of fully autonomous driving, with only a handful of accidents recorded.

There is a strong case for self-driving vehicles on the grounds of improving road safety. Since over 80 percent of road-traffic accidents result from human error, removing human decision-making could potentially lower the accident rate. The challenge is to eliminate human errors, without introducing unacceptable levels of machine errors. Tolerance of machine errors, in this most sacred of human activities, will be extremely low.

Autonomous driving systems at the higher SAE levels must be developed within a framework that ensures safety of the design and all its elements. The automotive safety standard ISO 26262 provides a framework to which any autonomous-systems development must adhere, by defining a set of Automotive Safety Integrity Levels (ASIL) and associated allowable failure rates. Moreover, the autonomous driving systems that make it onto the road in the vehicles of the future will also be subject to a range of harsh environments from tropical heat to arctic cold, with engine heat, thermal cycling, high vibration, shock, humidity, dust, and more, to challenge operability and reliability. Hence, in addition to being extremely powerful, fast, and energy efficient, the compute platforms underpinning the autonomous driving revolution must also satisfy qualification and certification to and beyond AEC-Q100.

Multiple Sensing Modalities – the Eyes, Ears, and More, of the System

In the same way that a human driver relies on visual information as well as sounds, forces on the body, and even sense of smell to control the vehicle in various contexts and anticipate events, autonomous systems rely on multiple sensing modalities to provide the raw data for decision making. The machine has an advantage over the human, in that many more senses can be provided to augment cameras working in the visual spectrum and inertial sensors analogous to the human sense of balance, movement, and positioning. These include radar, lidar, ultrasonic, infrared, GPS, and also wireless V2X data that can provide awareness of hazards that are out of sight.

At the nexus of all of these sensing modalities – the eyes, ears, and more, of the system – is a Centralised Processing Module (CPM) that must make real-time decisions based on all the data streaming continuously from the various channels. The capabilities of this module determine which autonomous-driving modes the system can perform safely.

Processing System Architecture

Figure 2 illustrates the functions the CPM needs to convert the raw sensor data into safe and appropriate autonomous-driving decisions.

Among the functions shown, the Data Aggregation, Pre-Processing and Distribution (DAPD) block interfaces with the different sensor modalities. It performs basic processing of the incoming data, and routes and switches information between the processing units and accelerators within the module.

High-performance serial processing performs data extraction, sensor fusion and high-level decision-making based upon its inputs. In some applications, neural networks will be implemented within the high-performance serial processing.

Safety processing performs real-time processing and vehicle control based upon the detected environment provided by pre-processing in the DAPD device and results from the neural network acceleration and high-performance serial processing elements.
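To make the distribution role of the DAPD block a little more concrete, here is a minimal software sketch of the routing idea only. It is a toy model, not how such a block would be built in silicon or programmable logic, and every name in it (`SensorFrame`, `Dapd`, `route`, `ingest`) is hypothetical. The "pre-processing" is reduced to dropping empty frames, purely as a placeholder.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class SensorFrame:
    """One unit of raw data from a sensor (hypothetical structure)."""
    sensor: str          # e.g. "camera", "radar", "lidar"
    timestamp_ms: int
    payload: bytes


Consumer = Callable[[SensorFrame], None]


class Dapd:
    """Toy stand-in for the aggregation, pre-processing and distribution block."""

    def __init__(self) -> None:
        self._routes: Dict[str, List[Consumer]] = {}

    def route(self, sensor: str, consumer: Consumer) -> None:
        """Register a processing element that should receive this sensor's frames."""
        self._routes.setdefault(sensor, []).append(consumer)

    def ingest(self, frame: SensorFrame) -> int:
        """Pre-process one frame and forward it; return how many consumers got it."""
        if not frame.payload:
            # Placeholder pre-processing step: discard empty frames.
            return 0
        consumers = self._routes.get(frame.sensor, [])
        for consumer in consumers:
            consumer(frame)
        return len(consumers)


if __name__ == "__main__":
    fusion_inbox: List[SensorFrame] = []   # stands in for high-performance serial processing
    safety_inbox: List[SensorFrame] = []   # stands in for safety processing

    dapd = Dapd()
    dapd.route("camera", fusion_inbox.append)
    dapd.route("camera", safety_inbox.append)
    dapd.route("radar", fusion_inbox.append)

    dapd.ingest(SensorFrame("camera", 0, b"\x01"))
    dapd.ingest(SensorFrame("radar", 1, b"\x02"))
    print(len(fusion_inbox), len(safety_inbox))
```

The point of the sketch is that one block sees every sensor stream and decides where each frame goes, so adding a sensor or a new consumer is a routing change rather than a redesign.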

Creating the CPM presents the designer with several interfacing, scalability, compliance and performance challenges. And, of course, tight SWaP-C constraints (Size, Weight and Power – Cost) always come with the automotive territory. Moreover, applicable standards, best-practices, and machine-learning algorithms can be expected to evolve quickly as the higher-level autonomous-driving systems move through serious prototyping and early deployment.

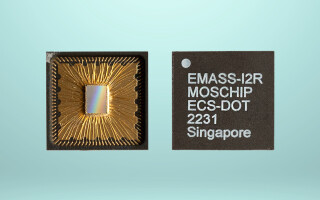

To overcome these challenges, we can conceive an integrated and programmable solution that handles not only the interfacing, pre-processing and routing capabilities of the DAPD, but that also integrates certain safety processing features and potentially neural-network machine-learning functionality, either within the same silicon or as a standalone hardware accelerator device. This opportunity is driving the emergence of a new class of automotive-grade programmable Multi-Processor System on Chip (MPSoC) ICs. These leverage “More than Moore” technologies to integrate multiple application processor cores and real-time processor cores with highly parallelised programmable logic and high-bandwidth industry-standard interfaces, all on the same silicon.

With two lockstep cores, a real-time processing unit (RPU) can be capable of handling safety-critical functionality up to ASIL-C. To provide the necessary functional safety, such an RPU also needs to be able to reduce, detect, and mitigate single random failures, including both hardware and single-event induced failures.
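The lockstep principle can be illustrated in software, with the caveat that real lockstep is a hardware feature in which comparison logic checks the outputs of the two cores. The sketch below only mimics the idea: the same computation runs twice and any disagreement is treated as a fault. The control law and all names (`lockstep_execute`, `LockstepFault`, `brake_demand`, `faulty_brake_demand`) are hypothetical and exist only for illustration.

```python
from typing import Callable


class LockstepFault(Exception):
    """Raised when the two redundant computations disagree."""


def lockstep_execute(primary: Callable[[], float], shadow: Callable[[], float]) -> float:
    """Run the same computation on two 'cores' and compare the results.

    A mismatch does not reveal which copy is wrong, only that a fault
    occurred, so the caller is expected to move to a safe state.
    """
    result_a = primary()
    result_b = shadow()
    if result_a != result_b:
        raise LockstepFault(f"core outputs differ: {result_a!r} vs {result_b!r}")
    return result_a


def brake_demand(speed_mps: float, gap_m: float) -> float:
    """Hypothetical control law: brake demand in [0, 1] from the time gap."""
    if speed_mps <= 0.0:
        return 0.0
    if gap_m <= 0.0:
        return 1.0
    time_gap_s = gap_m / speed_mps
    return min(1.0, 2.0 / time_gap_s)


def faulty_brake_demand(speed_mps: float, gap_m: float) -> float:
    """Same law with an injected error, standing in for a random hardware fault."""
    return brake_demand(speed_mps, gap_m) + 0.25


if __name__ == "__main__":
    # Healthy case: both copies agree and the result is used.
    print(lockstep_execute(lambda: brake_demand(20.0, 80.0),
                           lambda: brake_demand(20.0, 80.0)))
    # Faulty case: the comparison catches the disagreement.
    try:
        lockstep_execute(lambda: brake_demand(20.0, 80.0),
                         lambda: faulty_brake_demand(20.0, 80.0))
    except LockstepFault as fault:
        print("entering safe state:", fault)
```

Note that a two-way comparison can detect a fault but cannot say which result is correct, which is why the sketch hands control to a safe state rather than picking a winner.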

Safety processors are required to interact directly with vehicle controls such as steering, acceleration and braking. Clearly, the avoidance of processing errors in this subsystem is critical. In addition to lockstep capability of the cores, further important mitigation provisions include support for Error Correction Codes (ECC) on caches and memories to ensure the integrity of both the application programme and the data required to implement the autonomous vehicle control. Built-in Self-Test (BIST) at power-on is also important to ensure the underlying hardware has no faults prior to operation, as is the ability to functionally isolate memory and peripherals within the device if they are found to be defective.
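For readers unfamiliar with how ECC recovers from a flipped bit, the sketch below uses the classic Hamming(7,4) code, which protects 4 data bits with 3 parity bits and can correct any single-bit error. This is an illustrative stand-in chosen for readability: the codes used on real caches and memories are wider and are not specified in this article. The function names are hypothetical.

```python
from typing import Tuple


def hamming74_encode(nibble: int) -> int:
    """Encode 4 data bits as a 7-bit codeword.

    Bit i of the result holds codeword position i + 1. Positions 1, 2 and 4
    carry parity; positions 3, 5, 6 and 7 carry data bits d0..d3.
    """
    d = [(nibble >> i) & 1 for i in range(4)]
    p1 = d[0] ^ d[1] ^ d[3]   # covers positions 3, 5, 7
    p2 = d[0] ^ d[2] ^ d[3]   # covers positions 3, 6, 7
    p4 = d[1] ^ d[2] ^ d[3]   # covers positions 5, 6, 7
    bits = [p1, p2, d[0], p4, d[1], d[2], d[3]]
    return sum(bit << i for i, bit in enumerate(bits))


def hamming74_decode(codeword: int) -> Tuple[int, int]:
    """Return (nibble, error_position); error_position is 0 if none was seen.

    The syndrome is the XOR of the positions of all set bits. It is zero for
    a valid codeword and equals the flipped position after a single-bit error.
    """
    bits = [(codeword >> i) & 1 for i in range(7)]
    syndrome = 0
    for position in range(1, 8):
        if bits[position - 1]:
            syndrome ^= position
    if syndrome:
        bits[syndrome - 1] ^= 1   # correct the single flipped bit
    nibble = bits[2] | (bits[4] << 1) | (bits[5] << 2) | (bits[6] << 3)
    return nibble, syndrome


if __name__ == "__main__":
    stored = hamming74_encode(0b1011)
    corrupted = stored ^ (1 << 4)          # flip codeword position 5
    value, position = hamming74_decode(corrupted)
    print(bin(value), position)
```

A code this small corrects single-bit errors only; two flipped bits in the same codeword would be silently miscorrected, which is one reason practical memory ECC schemes add further check bits.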

As far as interfacing with sensor modalities throughout the vehicle is concerned, typical high-speed interfaces will include MIPI, JESD204B, LVDS and Gigabit Ethernet (GigE) for high-bandwidth sensors such as cameras, radar and lidar. MPSoC ICs typically contain flexible I/Os that can be configured to connect directly with MIPI, LVDS and gigabit serial links, leaving the higher levels of the protocols to be implemented in the programmable logic fabric, often using IP cores. Interfaces such as CAN, SPI, I2C and UART are already established within the automotive application space, and are provided as ready-to-use functions within the processing system of these devices.

Implementing applicable protocols within the programmable-logic fabric of an MPSoC device also enables any revisions or updates of standards to be easily incorporated along with providing flexibility as to the number of specific sensor interfaces supported within a solution.

Machine Learning to Drive

Humans acquire knowledge and experience each time they drive, and so will the autonomous machines we train to drive future generations of vehicles. Machine learning will have an important role in autonomous driving, to aid decision-making in situations that are similar – although rarely, if ever, identical – to any encountered before.

Image recognition is currently one of the most important uses of machine-learning neural networks in autonomous driving, to help vehicles recognise important objects such as other vehicles, pedestrians, vulnerable road users like cyclists, junctions, crossings, road signs, objects such as obstructions in the road, and other hazards. While the vehicle is moving, getting the results of these analyses is time-critical and will drive demand for low-power embedded real-time machine-learning systems.

Frames/second/Watt is a critical figure of merit for embedded neural network implementations. Lightweight, on-board neural networks can be implemented in FPGAs using high-level languages like C, C++ and OpenCL, which enables functionality to be moved seamlessly from the processor system to the programmable logic. Builders of neural networks have found that the highly parallel nature of FPGA processing in programmable logic, combined with the absence of external-memory bottlenecks, allows greater determinism and responsiveness than alternative architectures such as graphics processing units (GPUs).
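The figure of merit itself is simple arithmetic: throughput in frames per second divided by average power in watts. Because a watt is a joule per second, the result is equivalent to frames processed per joule of energy. The helper below is a minimal sketch with a hypothetical name, and the numbers in the usage example are placeholders rather than measurements of any device.

```python
def frames_per_second_per_watt(frames: int, elapsed_s: float, avg_power_w: float) -> float:
    """Return throughput per unit power, which is also frames per joule."""
    if elapsed_s <= 0.0 or avg_power_w <= 0.0:
        raise ValueError("elapsed time and average power must be positive")
    frames_per_second = frames / elapsed_s
    return frames_per_second / avg_power_w


if __name__ == "__main__":
    # Placeholder figures for illustration only: 3000 frames in 10 s at 5 W.
    print(frames_per_second_per_watt(frames=3000, elapsed_s=10.0, avg_power_w=5.0))
```

When comparing platforms with this metric, the power figure should cover the same boundary in each case (for example, the whole board rather than the accelerator alone), otherwise the comparison is not like for like.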

Other work to increase frames/second/Watt is showing that later-generation models like Spiking Neural Networks (SNNs) can increase throughput and reduce power consumption compared to more traditional models such as convolutional neural networks (CNNs). Multiple SNN cores have been successfully instantiated in FPGAs to handle large numbers of video channels at high frame rates, with power consumption of just a few watts.
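To give a feel for what "spiking" means, the sketch below simulates a single leaky integrate-and-fire neuron in discrete time. The article does not say which neuron model the FPGA implementations use, so this common textbook model is an assumption, as are the function name and the `leak` and `threshold` parameters.

```python
from typing import Iterable, List


def lif_neuron(inputs: Iterable[float], leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron.

    At each step the membrane potential decays by `leak`, the input is added,
    and the neuron emits a spike (1) and resets to zero once the potential
    reaches `threshold`. Otherwise it stays silent (0).
    """
    potential = 0.0
    spikes: List[int] = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # A steady input builds up until the neuron fires, then the cycle restarts.
    print(lif_neuron([0.6, 0.6, 0.6, 0.6], leak=0.5))
    # With no input there is no activity at all.
    print(lif_neuron([0.0] * 5))
```

A commonly cited reason for the efficiency of spiking models is visible even here: the neuron produces output only when something crosses the threshold, so quiet inputs generate no downstream work.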

Conclusion

The roadmap to fully autonomous self-driving vehicles is mature, and has moved through several levels of autonomy that begin with basic driver-assistance systems. Safety, obviously, is critical, but a viable compute platform must also tackle extreme SWaP-C challenges. Highly parallel and reprogrammable processing in Multi-Processor System-on-Chip ICs can meet the needs of the next generation of auto-pilot and fully self-driving systems. With suitable safety processors also integrated, capable of meeting industry safety standards such as ISO 26262, these devices are able to satisfy key system requirements at the highest levels of autonomous driving.