Issues of trust in silicon

August 03, 2016

Trust in modern silicon is something most of us take for granted. Many techniques are used to build security features into silicon, including:

- Adding security extensions, such as ARM’s TrustZone, to processors to allow them to run in secure and non-secure modes,

- Implementing Trusted Platform Modules (TPMs) designed to secure hardware by integrating cryptographic keys, and

- Incorporating a physically unclonable function (PUF) that provides a unique challenge-response mechanism dependent on the complex and variable nature of the silicon material used to manufacture the system on chip (SoC).

All of these design principles and primitives are necessary to ensure that the resulting silicon has the tools in place for software to build a trusted computing environment. Many have been implemented, and the Department of Defense (DoD) requires all systems to include a TPM [1].

[Figure 1 | An SoC design team built several Trusted Computing elements into this design (figure courtesy of Microsemi)]

These security primitives and platforms are a great start. However, simply including a security intellectual property (IP) block or primitive is not enough to make the system or silicon secure. Security vulnerabilities can still exist within an SoC design because of design and verification issues, which often arise from varying levels of trust and expertise.

Modern SoCs are composed of hundreds of IP blocks and many come from a myriad of different vendors. Should a design team trust all of them? Probably not. Were these blocks designed to avoid all known security pitfalls? Definitely not.

Most problems stem from one of two sources: IP blocks that come from vendors with different levels of trust, or vendors who are concerned with functionality above all else.

Issue 1: Vastly different levels of trust

Some IP blocks are commonplace, such as a standard USB controller. If it appears to behave like a USB controller, then there shouldn’t be any concerns. However, when such a block is purchased from an IP vendor with a relatively low trust level, how can a design team expect it to behave well with the rest of the system?

Other IP blocks serve extremely crucial roles, such as an in-house crypto key manager. Including both kinds of IP block in an SoC can introduce elusive security issues that were not considered during the architectural design. For example, does the USB controller have access to the crypto key manager over the SoC interconnect? One would hope not, but many SoC vendors do not know because they do not perform the appropriate security verification at each stage of the design cycle.
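The interconnect question above can be posed as a reachability check. The following is a minimal sketch, assuming the interconnect can be summarized as a table of which block can address which; the block names and the `INTERCONNECT` table are hypothetical and do not describe any real design or verification tool.

```python
from collections import deque

# Hypothetical interconnect summary: each initiator lists the targets it can address.
INTERCONNECT = {
    "cpu_secure": ["key_manager", "sram", "bridge"],
    "cpu_nonsecure": ["sram", "bridge"],
    "usb_controller": ["sram", "bridge"],
    "bridge": ["uart", "key_manager"],  # an easily overlooked indirect path
}


def reachable(graph, source):
    """Return every block reachable from source, following connections transitively."""
    seen = set()
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def violations(graph, untrusted, protected):
    """List (untrusted, protected) pairs where the untrusted block can reach the protected one."""
    return sorted(
        (u, p) for u in untrusted for p in protected if p in reachable(graph, u)
    )


if __name__ == "__main__":
    print(violations(INTERCONNECT, ["usb_controller"], ["key_manager"]))
```

In this toy table the USB controller has no direct connection to the key manager, yet the check still flags the pair because of the path through the bridge. Indirect paths of this kind tend to appear only once blocks are integrated.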

Silicon security must be built in by design, and security verification must be performed at every stage of development, especially as the various IP blocks are integrated.

Issue 2: Vendors concerned with functionality only

Blindly trusting test vectors provided by a third-party IP vendor is a poor approach to building a secure system. When IP vendors develop their test suites, their only concern is functionality. They strive solely to ensure that the logic and accuracy of their IP blocks match the functional specification.

For instance, IP vendors check whether their AES-256 core can correctly perform encryption in the right number of cycles. However, correctly performing encryption is completely orthogonal to ensuring the AES-256 core is devoid of security flaws such as leaking the secret key to any unintended outputs.
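The difference between functional correctness and key leakage can be illustrated with a simple taint-propagation pass. This is a sketch under stated assumptions: the `NETLIST` below is a hypothetical, heavily simplified dependency table, not a real AES-256 core, and structural dependency is a conservative approximation that can over-report compared with a real information-flow analysis.

```python
# Hypothetical netlist: each signal lists the signals it is computed from.
NETLIST = {
    "round_key": ["key", "round_counter"],
    "state": ["plaintext", "round_key"],
    "ciphertext": ["state"],
    "busy": ["round_counter"],
    "debug_bus": ["round_key", "debug_en"],
}


def tainted_signals(netlist, secret):
    """Return every signal that structurally depends on the secret (fixed-point iteration)."""
    tainted = {secret}
    changed = True
    while changed:
        changed = False
        for signal, sources in netlist.items():
            if signal not in tainted and tainted.intersection(sources):
                tainted.add(signal)
                changed = True
    return tainted


def leaking_outputs(netlist, secret, outputs, allowed):
    """Outputs that depend on the secret but are not on the allowed list."""
    tainted = tainted_signals(netlist, secret)
    return sorted(o for o in outputs if o in tainted and o not in allowed)


if __name__ == "__main__":
    outputs = ["ciphertext", "busy", "debug_bus"]
    print(leaking_outputs(NETLIST, "key", outputs, allowed={"ciphertext"}))
```

The ciphertext is expected to depend on the key, so it is listed as allowed. Any other output that depends on the key, such as the hypothetical `debug_bus` here, is the kind of unintended output a functional test suite would not flag.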

In other words, IP vendors do not strive to meet a security specification. It is naive to trust that the test vectors provided by the IP vendor will exercise all critical security aspects. Therefore, it is incumbent upon the SoC design team to perform security verification on all IP blocks to ensure silicon security.

The way an IP block is integrated into an SoC can easily exercise aspects of the block that violate the security of the system. Assume a crypto key manager provides an interface for storing the key. A debug state of the key manager could potentially let someone read out the key. What if this security vulnerability were not covered in the test vectors provided by the IP vendor? Again, the burden is on the SoC design team to perform security verification on all IP blocks to ensure silicon security.
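The debug-state scenario can be sketched with a toy behavioral model. Everything here is hypothetical: the `KeyManager` class, its `KEY_REG` address, and the deliberately planted debug flaw are illustrative assumptions, not a description of any vendor's IP. The sketch contrasts a functionality-only test with a security test that sweeps the debug setting and the address space.

```python
from itertools import product

KEY_REG = 0x10  # hypothetical register address, for illustration only


class KeyManager:
    """Toy model of a key manager with a store interface and a debug mode."""

    def __init__(self):
        self._key = 0
        self.debug = False

    def store_key(self, key):
        self._key = key

    def set_debug(self, enabled):
        self.debug = enabled

    def read(self, addr):
        # Planted flaw: in debug mode the key register becomes readable.
        if addr == KEY_REG and self.debug:
            return self._key
        return 0


def functional_test():
    """A functionality-only check: the key is not readable in normal mode."""
    km = KeyManager()
    km.store_key(0xC0FFEE)
    return km.read(KEY_REG) == 0


def security_test(secret=0xC0FFEE):
    """Sweep the debug setting and every address; report reads that return the key."""
    leaks = []
    for debug, addr in product([False, True], range(0x20)):
        km = KeyManager()
        km.store_key(secret)
        km.set_debug(debug)
        if km.read(addr) == secret:
            leaks.append((debug, addr))
    return leaks


if __name__ == "__main__":
    print("functional test passes:", functional_test())
    print("leaks found:", security_test())
```

The functional test passes because it never enters the debug state, while the sweep reports the one combination that exposes the key. A real design would need far more than an exhaustive sweep of a toy model, but the gap between the two tests is the point.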

The Design For Security solution

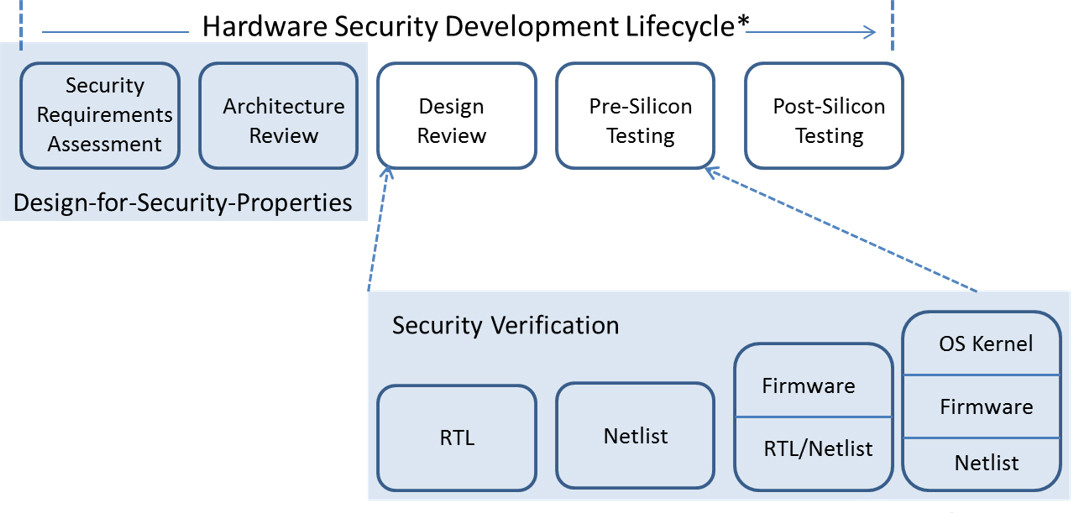

These types of vulnerabilities can be avoided if SoC design teams implement a Design For Security (DFS) methodology by identifying and verifying silicon security properties throughout the hardware design lifecycle, from architecture discussions to tapeout. This entails adequately defining the desired security properties during the SoC’s architectural design.

Next, these security properties must be verified with appropriate security verification software, both at the individual IP block level and at the level of the integrated design.

[Figure 2 | A DFS methodology encompasses verifying security at every stage of the hardware design lifecycle]

Summary

Every block, whether simple or complex, must be verified for security to ensure a secure system. Without DFS, security vulnerabilities such as the ones cited may still exist, regardless of whether the design team uses security primitives such as PUFs, TPMs, and crypto processors.

As SoC design teams implement DFS methods within their register transfer level (RTL) design flows, security vulnerabilities can be addressed and eradicated from architecture to tapeout, ensuring a fully secure system. We can go back to trusting modern silicon.

References:

1. Lemos, Robert. “U.S. Army Requires Trusted Computing.” Security Focus. July 28, 2006. http://www.securityfocus.com/brief/265.

2. Khattri, Hareesh, Narasimha Kumar V Mangipudi, and Salvador Mandujano. “HSDL: A Security Development Lifecycle for Hardware Technologies.” 2012 IEEE International Symposium on Hardware-Oriented Security and Trust, 2012.

Tortuga Logic, Inc.