ISO 26262 - A static analysis tools perspective

April 14, 2015


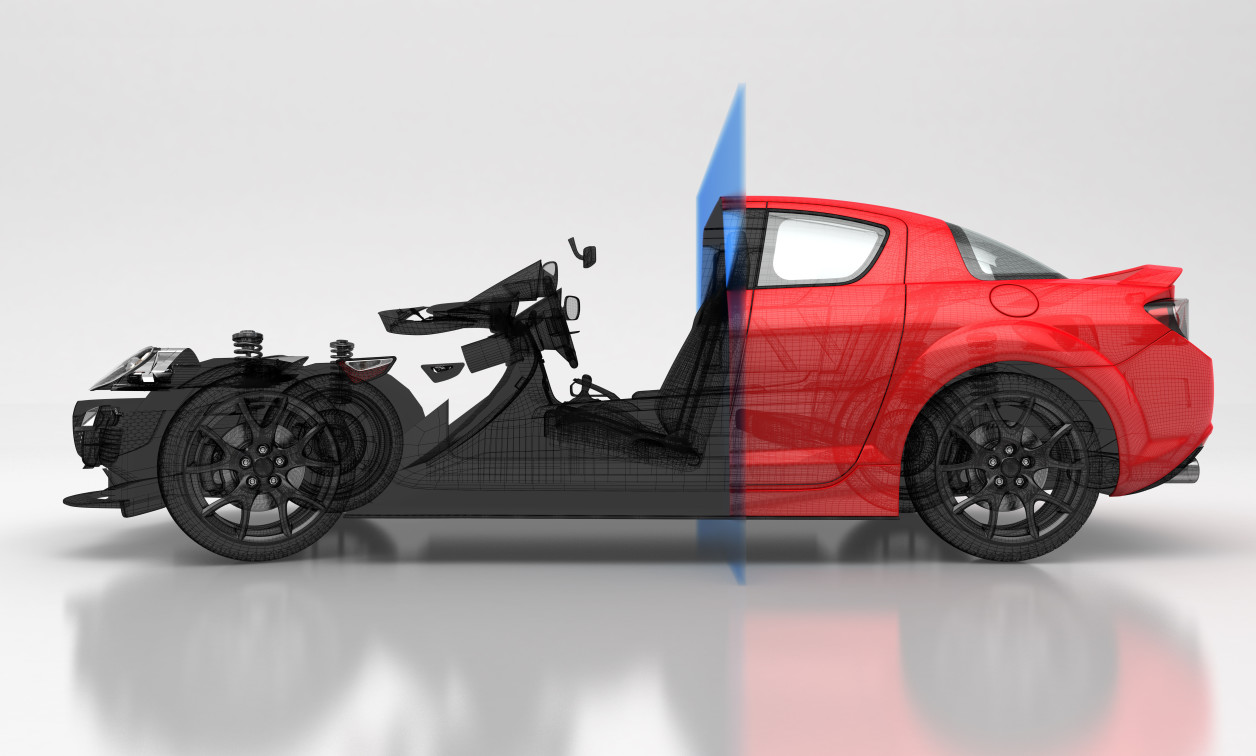

Cars are becoming more and more dependent on electronics, and manufacturers are increasingly turning to innovations in electronics and software to give them a competitive advantage. A modern luxury automobile might have up to 100 different embedded processors running over 100 million lines of code. With so much software, it is essentially impossible to get it all right. Nowadays it is estimated that 60-70 percent of automobile recalls involve software.

The risk of software defects in automotive systems is acute because software controls many safety-critical aspects of the vehicle, including the throttle, the transmission, and the brakes. Thus, the development of safety-critical automotive software requires strict standards and a meticulous approach.

In 2011, ISO 26262 was published as the international standard for the development of safety-critical automotive electronic systems, and its use is increasing worldwide. It is based on the more generic IEC 61508 safety standard, but introduces automotive-specific refinements. This article summarizes some of the key aspects of the standard that are most important to embedded software developers. It starts with a brief introduction to ISO 26262 and describes how tools in general can help with certification. Next, it describes how tools are qualified for use in certification. The key takeaway, discussed in the last section, is that much of the standard concerns the development of robust software, and that, when used appropriately, advanced static analysis tools offer one of the most economical ways of achieving a high level of software robustness.

ISO 26262

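This document uses the term ISO 26262 or “the standard” to mean the part of ISO 26262 that addresses software; specifically ISO 26262-6:2011 Road vehicles — Functional safety — Part 6: Product development at the software level. Two related topics discussed below are defined elsewhere in the standard: ASIL determination in Part 3 (Concept phase), and confidence in the use of software tools in Part 8 (Supporting processes).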

ISO 26262 is a risk-based standard. While it acknowledges that it is impossible to reduce the risk to zero, it requires that risks be qualitatively assessed and that measures be taken to reduce them to “as low as reasonably practicable” (ALARP).

The vocabulary used in ISO 26262 speaks of faults, errors, and failures, where “a fault can manifest as an error … and the error can ultimately cause a failure.” The most important term to understand is the “Automotive Safety Integrity Level,” or ASIL. ASIL is a classification of the risk of an element of the electronics. Level D represents the parts with the highest risk, and level A the lowest (an additional label, QM for quality management, is used when the risk is deemed to be less than ASIL A). The level is assigned by following an assessment process applied to the hazards. Each potentially hazardous event is classified by the severity of the injuries it might cause, where S0 indicates no injuries and S3 represents a threat to life. The other important factors in the assessment are the exposure, which ranges from E0 (incredible) to E4 (high probability), and the controllability, which indicates whether the driver can take action to prevent injury (C0 means easy, C3 means difficult or impossible).

The ASIL is determined by taking these three factors into account together. A hazard that sits at the top of all three scales (S3, E4, C3) will be assigned ASIL D. However, a hazard with the highest severity may be assigned only ASIL A if it is very unlikely to occur (for example, S3 combined with E1 and C3).
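To make the combination concrete, the Python sketch below encodes the ASIL lookup as a small function. It relies on a widely used shortcut, assumed here rather than quoted from the standard: when S, E, and C are all at least 1, their sum reproduces the ASIL table in ISO 26262-3 (10 gives D, 9 gives C, 8 gives B, 7 gives A, anything lower gives QM), and a class of 0 on any factor means no ASIL is assigned. The function name is hypothetical, and the standard's own table remains the authoritative reference.

```python
def determine_asil(s: int, e: int, c: int) -> str:
    """Return "QM" or an ASIL letter for severity S0-S3, exposure E0-E4,
    and controllability C0-C3 (hypothetical helper using the sum-rule shortcut)."""
    if not (0 <= s <= 3 and 0 <= e <= 4 and 0 <= c <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    # A class of 0 on any factor means no ASIL is assigned.
    if s == 0 or e == 0 or c == 0:
        return "QM"
    # Shortcut: the sum S + E + C reproduces the standard's lookup table.
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(s + e + c, "QM")


print(determine_asil(3, 4, 3))  # D: worst case on every scale
print(determine_asil(3, 1, 3))  # A: life-threatening but very rare
print(determine_asil(1, 2, 2))  # QM
```

The second call is the situation described above: the highest severity, but a very low exposure pulls the result down to ASIL A.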

Once an ASIL is determined for a hazard, it is inherited by the safety goals that mitigate the hazard and by the safety requirements derived from those goals. The ASIL then dictates the minimum testing requirements for the software.

Using tools to help with certification

ISO 26262 is explicit in acknowledging that software validation tools are essential for meeting the testing requirements. Consequently, the standard requires that such tools are themselves qualified. The important thing to note is that the tools used may themselves be imperfect and that those imperfections may undermine the safety case for the entire system, so the standard requires an assessment of the tool confidence level (TCL). This is determined by assessing two things:

- The possibility that the testing requirements will not be met if the testing tool fails, and

- The probability that the failure of the tool can be detected by its users

The value can range from TCL1 (highest confidence) to TCL3 (lowest confidence). These two factors are called the tool impact (TI) and the tool error detection (TD).

The tool impact describes the consequences of a potential malfunction in the tool. TI1 is used when it can be argued that a malfunction in the tool can neither a) lead to a failure in the system nor b) prevent a failure from being detected. TI2 is used otherwise.

TD expresses the degree of confidence that a malfunction of the tool, and the erroneous output it causes, will be prevented or detected; TD1 means the highest confidence and TD3 the lowest.

Once the tool impact and the tool error detection have been assessed, the TCL can be determined from Table 1.

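Table 1: Tool confidence level (TCL) determined from tool impact (TI) and tool error detection (TD)

|     | TD1  | TD2  | TD3  |
|-----|------|------|------|
| TI1 | TCL1 | TCL1 | TCL1 |
| TI2 | TCL1 | TCL2 | TCL3 |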

For example, consider a fictional dynamic analysis tool Cov that can be used to measure the code coverage achieved by running the software on a given test suite. Let’s say that the ASIL is D (the most risky) and that the corresponding safety requirement is that the test suite must achieve 100 percent branch coverage.

The tool impact of Cov is clearly TI2 because if it fails to report the coverage metric correctly, the code coverage requirement may not be satisfied.

Judging the tool error detection can be tricky. Let’s say that Cov has a shortcoming where it can sometimes fail to instrument some portions of code and so cannot measure the code coverage for those parts, and that instead of raising an alert in that case it simply omits that information from its report. An inexperienced user of the tool might fail to notice the omission, so the coverage goal might be inadvertently missed. In such a case the tool might be judged to be TD2.
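One way a project could raise its confidence that such an omission will be noticed is to cross-check the tool's output automatically instead of relying on users to spot gaps. The Python sketch below is hypothetical: it assumes that the list of functions in the build and Cov's per-function report are already available as plain Python data, and it simply flags any function that the report leaves out.

```python
def find_unreported_functions(build_functions, coverage_report):
    """Return functions present in the build but missing from the coverage report.

    build_functions: iterable of function names compiled into the software.
    coverage_report: mapping of function name -> branch coverage percentage.
    Both inputs are hypothetical stand-ins for real tool output.
    """
    return sorted(set(build_functions) - set(coverage_report))


# Hypothetical data: Cov failed to instrument brake_ctrl_update and
# silently dropped it from its report instead of raising an alert.
build_functions = ["brake_ctrl_init", "brake_ctrl_update", "brake_ctrl_fault"]
coverage_report = {"brake_ctrl_init": 100.0, "brake_ctrl_fault": 100.0}

missing = find_unreported_functions(build_functions, coverage_report)
if missing:
    print("Coverage report is incomplete; not measured:", ", ".join(missing))
```

Without such a check, a report that lists only fully covered functions could easily be read as meeting the 100 percent branch coverage goal.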

Consequently, by reference to Table 1, the TCL for Cov will be TCL2.
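The lookup in Table 1 is small enough to write down directly. The Python sketch below uses hypothetical function names. It encodes the rule that TI1 always yields TCL1 while under TI2 the TCL number equals the TD number, adds the rule from the next section that TCL2 and TCL3 need at least one qualification method, and applies both to the fictional Cov tool.

```python
def determine_tcl(ti: int, td: int) -> int:
    """Tool confidence level (1-3) from tool impact TI1-TI2 and
    tool error detection TD1-TD3 (hypothetical helper)."""
    if ti not in (1, 2) or td not in (1, 2, 3):
        raise ValueError("expected TI1-TI2 and TD1-TD3")
    if ti == 1:
        # A malfunction cannot introduce or mask a failure: TCL1 regardless of TD.
        return 1
    # TI2: TD1 -> TCL1, TD2 -> TCL2, TD3 -> TCL3.
    return td


def needs_qualification(tcl: int) -> bool:
    """TCL2 and TCL3 require at least one qualification method; TCL1 does not."""
    return tcl >= 2


# The fictional Cov tool from the example: TI2, TD2.
cov_tcl = determine_tcl(ti=2, td=2)
print(f"Cov: TCL{cov_tcl}, qualification required: {needs_qualification(cov_tcl)}")
# Cov: TCL2, qualification required: True
```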

Tool qualification

ISO 26262 states that tools classified as TCL1 need no further qualification, whereas a tool classified as TCL2 or TCL3 may be claimed as qualified only after at least one tool qualification method has been applied. The four qualification methods are:

- Increased confidence from use, meaning that there is a track record of the tool having been used successfully on similar projects.

- Evaluation of the tool development process, meaning that the tool developers have been careful to follow a high-quality software development process.

- Validation of the software tool, meaning that the tool has been subjected to stringent validation protocols.

- Development in accordance with a safety standard, meaning that a rigorous development standard (such as ISO 26262 itself) was followed during the development.

For the higher ASIL levels, the latter two methods are highly recommended because they yield high confidence.

Tool qualification is not trivial, and it is usually beyond the scope of most embedded software projects. Fortunately, most tool vendors who care about ISO 26262 have done the qualification work themselves. The best case is for the tool to have been certified by an independent agency that specializes in the area. Independent certification shows that the vendor has satisfied fairly demanding requirements; it gives tool users confidence in the tool's quality and also saves them a great deal of certification effort.

Of course, to obtain ISO 26262 certification for a given embedded system, developers must make their own case, so they must gather all the relevant material, including all the tool qualifications. Most vendors supply a “certification kit” that contains this material in an easy-to-use form.

Static analysis tools and ISO 26262

ISO 26262 does not require the use of any particular class of tool; instead, it specifies properties that the code should have and lists methods that can be used to provide evidence that the code does in fact exhibit those properties. In many cases, tools are practically mandatory (e.g., it would be essentially impossible to gather accurate code coverage metrics without a modern tool). In other cases it may be less clear that tools are available that can help.

The section of the standard to which static analysis tools are most relevant is Section 8: Software unit design and implementation. One of the most obvious applications of static analysis tools is to verify that the software conforms to coding standards, as specified by section 5.4.7 and required by section 8.4.3.d. The best-known such standard in the automotive sector is MISRA C. Finding such violations has been a strength of static analysis tools since the earliest ones were developed.

The latest generation of static analysis tools can still find coding standard violations, but they can do much more. Their primary purpose is to find serious programming errors, such as those that can cause a program to crash or to stray into undefined behavior. These tools are flexible enough that they can also be used to satisfy some of the other requirements of ISO 26262.

One of the most pertinent sections is 8.4.4, which lists “Design principles for software unit design and implementation at the source code level …” to achieve properties including correct order of execution, robustness, and even code readability. Section 8.4.5 goes on to list techniques that should be used to verify that the software complies. One of the techniques listed is static code analysis.

ISO 26262 invokes the notion of “robustness” at several points. In the context of software unit design and implementation, robustness is deemed to include prevention of “implausible values, execution errors, division by zero, and errors in data flow and control flow” (8.4.4). Robustness features noted for software unit testing (9.4.2) and software integration and testing (10.4.3) include “absence of inaccessible software, effective error detection, and handling.”
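As an illustration of what these robustness properties can mean at the source code level, the hypothetical Python unit below rejects implausible inputs, guards the one division it performs, and checks its result against a plausibility limit instead of propagating a nonsensical value. The function name, the units, and the 400 km/h limit are assumptions made for this sketch, not values taken from the standard.

```python
import math
from typing import Optional

MAX_PLAUSIBLE_KPH = 400.0  # hypothetical plausibility limit for this sketch


def average_speed_kph(distance_m: float, elapsed_s: float) -> Optional[float]:
    """Average speed in km/h, or None when the inputs or result are implausible."""
    # Reject NaN and infinities before doing any arithmetic.
    if not (math.isfinite(distance_m) and math.isfinite(elapsed_s)):
        return None
    # A negative distance is implausible; elapsed_s == 0 would divide by zero.
    if distance_m < 0.0 or elapsed_s <= 0.0:
        return None
    speed = (distance_m / elapsed_s) * 3.6  # m/s -> km/h
    # Do not propagate a result outside the plausible range.
    return speed if speed <= MAX_PLAUSIBLE_KPH else None


print(average_speed_kph(100.0, 10.0))  # 36.0
print(average_speed_kph(100.0, 0.0))   # None
```

Returning None forces every caller to handle the error case explicitly, which is one way of providing the "effective error detection, and handling" the standard asks for.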

One reasonable way to summarize the robustness requirement is that the number of preventable serious software defects should be minimized. This is precisely the greatest strength of advanced static analysis tools.

Roughly speaking, these tools start by parsing the code and then create a high-fidelity model of the entire program. The analyses that find defects traverse the model in various ways, searching for anomalies. The most sophisticated algorithms perform a kind of simulation of the program's execution by exploring paths through the code, except that instead of using concrete values to represent the state of execution, they use a set of abstract equations that model program properties.
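The toy analyzer below, written in Python, illustrates the idea on a hypothetical two-line unit. It uses intervals, one of the simplest abstract domains, as its abstract values: rather than running the code on particular sensor readings, it follows each path with the whole range of readings that could reach it, and warns if a divisor's range includes zero. Commercial tools use far richer models; the unit under analysis, its 0 to 100 input range, and all names here are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Interval:
    """Abstract value: every concrete value the variable might hold lies in [lo, hi]."""
    lo: int
    hi: int

    def __sub__(self, other: "Interval") -> "Interval":
        # Smallest result: our minimum minus their maximum, and vice versa.
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def is_empty(self) -> bool:
        return self.lo > self.hi

    def contains(self, value: int) -> bool:
        return self.lo <= value <= self.hi


def check_divisor(divisor: Interval, where: str) -> List[str]:
    """Warn if zero is among the values the divisor could take."""
    if divisor.contains(0):
        return [f"{where}: possible division by zero (divisor in [{divisor.lo}, {divisor.hi}])"]
    return []


def analyze(sensor: Interval) -> List[str]:
    """Abstractly execute this hypothetical unit along both of its paths:

        offset = 10 if sensor > 50 else 50
        result = 1000 / (sensor - offset)
    """
    warnings: List[str] = []
    # Path 1: branch taken, so the condition refines sensor to [51, hi].
    then_sensor = Interval(max(sensor.lo, 51), sensor.hi)
    if not then_sensor.is_empty():
        warnings += check_divisor(then_sensor - Interval(10, 10), "then-path")
    # Path 2: branch not taken, so sensor is refined to [lo, 50].
    else_sensor = Interval(sensor.lo, min(sensor.hi, 50))
    if not else_sensor.is_empty():
        warnings += check_divisor(else_sensor - Interval(50, 50), "else-path")
    return warnings


for warning in analyze(Interval(0, 100)):
    print(warning)
# else-path: possible division by zero (divisor in [-50, 0])
```

The warning corresponds to the single concrete reading sensor == 50, a value that a hand-written test suite could easily skip.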

The most important property of these tools is that they can explore far more execution paths than even the most sophisticated testing regimen. Even if the code has been tested to a stringent coverage criterion such as full MC/DC (modified condition/decision coverage, the most demanding criterion specified by ISO 26262), that does not come close to guaranteeing full path coverage. In principle, advanced static analysis tools can achieve 100 percent path coverage (although in practice, sacrifices are often made to achieve reasonable scalability and precision). Consequently, they can and do find defects that are missed by traditional testing. Furthermore, they can be applied very early in the development cycle, even before unit tests have been written. The earlier a bug is found, the cheaper it is to fix, so these tools are an economical way to achieve high robustness.
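The gap between coverage criteria and path coverage is easy to demonstrate. In the hypothetical Python unit below, two tests exercise both outcomes of each decision, which gives 100 percent branch coverage (and, because each decision has a single condition, MC/DC as well), yet both tests pass. Only enumerating all four paths exposes the division by zero that occurs when both conditions are true.

```python
from itertools import product


def hypothetical_unit(a: bool, b: bool) -> float:
    """Two independent decisions; the defect needs both to be true."""
    divisor = 2
    if a:
        divisor -= 1
    if b:
        divisor -= 1
    return 10 / divisor  # divisor is 0 only on the path where a and b are true


def covered_branches(tests):
    """Set of (decision, outcome) pairs exercised by a list of (a, b) inputs."""
    return {(name, value) for a, b in tests for name, value in (("a", a), ("b", b))}


branch_suite = [(True, False), (False, True)]
all_branches = {(name, value) for name in ("a", "b") for value in (True, False)}
assert covered_branches(branch_suite) == all_branches  # 100% branch coverage
results = [hypothetical_unit(a, b) for a, b in branch_suite]  # both tests pass

# Path coverage: 2 decisions in sequence give 2**2 = 4 paths.
failing_paths = []
for a, b in product((False, True), repeat=2):
    try:
        hypothetical_unit(a, b)
    except ZeroDivisionError:
        failing_paths.append((a, b))
print(failing_paths)  # [(True, True)]
```

With n such decisions in sequence, two tests can still achieve branch coverage, while the number of paths grows as 2^n, which is why exhaustive path exploration by testing quickly becomes impractical.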

Conclusion

The motivation of any safety-critical software standard, especially ISO 26262, is to reduce the risk of software failures to ALARP: as low as reasonably practicable. This means not just adhering to the minimum requirements of the standard, but also applying best-practice techniques to achieve high quality. The properties of advanced static analysis tools make them invaluable not just for following the letter of the law, but also for keeping to its spirit: the development of robust and reliable software.

GrammaTech, Inc.