Defining (artificial) intelligence at the edge for IoT systems

March 22, 2017

Merriam-Webster defines intelligence as “the ability to learn or understand or to deal with new or trying situations.” By that definition, “intelligent systems” on the Internet of Things (IoT) require much more than just an Internet connection.

As more IoT devices come online, machine intelligence will increasingly be defined as a system’s ability to ingest information from its ambient environment through sensor inputs and then take immediate, autonomous action, even when the readings from those inputs have not been encountered before or fall outside the parameters programmers defined during system development. To date, this type of intelligence has largely been the domain of computer vision (CV) applications such as object recognition, where a growing number of machine learning development libraries like Caffe, TensorFlow, and Torch have enabled the creation of models used in the training and inferencing of deep and convolutional neural networks (DNNs/CNNs). While artificial intelligence (AI) technology is still in its infancy, its benefits for advanced driver assistance systems (ADAS), collaborative robots (cobots), sense-and-avoid drones, and a host of other embedded applications are obvious.

However tantalizing, machine learning does come with drawbacks for embedded systems. First and foremost, the computational resources required to run a CNN/DNN for applications such as object recognition have largely been available only in the data center. Some have argued that embedded devices could simply transmit their readings to the cloud, where large-footprint machine learning algorithms would be processed, and so avoid the need for server-class infrastructure at the edge. For many of the safety-critical applications mentioned previously, however, the latency and transmission costs of doing so make that approach a non-starter.
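The latency argument can be made concrete with a rough budget calculation. The sketch below is only illustrative: the frame size, uplink speed, round-trip time, inference times, and deadline are all hypothetical values chosen for the example, not measurements from any system discussed here.

```python
def cloud_round_trip_ms(payload_bytes, uplink_mbps, network_rtt_ms, cloud_inference_ms):
    """Estimate the latency of offloading one sensor frame to the cloud.

    Adds the time to upload the payload, the network round trip,
    and the time the data center spends on inference.
    """
    upload_ms = payload_bytes * 8 / (uplink_mbps * 1_000_000) * 1000
    return upload_ms + network_rtt_ms + cloud_inference_ms


def meets_deadline(latency_ms, deadline_ms):
    """Return True when a response arrives within the control deadline."""
    return latency_ms <= deadline_ms


# All values below are assumptions for illustration only.
frame_bytes = 640 * 480 * 3      # one uncompressed VGA RGB frame
deadline_ms = 100.0              # hypothetical safety-critical response deadline
edge_ms = 40.0                   # hypothetical on-device inference time

cloud_ms = cloud_round_trip_ms(
    frame_bytes,
    uplink_mbps=10,              # hypothetical cellular uplink
    network_rtt_ms=50,           # hypothetical network round trip
    cloud_inference_ms=10,       # hypothetical data center inference time
)

print(f"cloud: {cloud_ms:.2f} ms, within deadline: {meets_deadline(cloud_ms, deadline_ms)}")
print(f"edge:  {edge_ms:.2f} ms, within deadline: {meets_deadline(edge_ms, deadline_ms)}")
```

Under these assumed numbers the upload alone dominates the budget, which is the general shape of the problem: even a fast data center cannot recover time lost on the link.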

Also rooted in the origins of AI technology is the fact that most, if not all, machine learning frameworks were developed to run on data center infrastructure. As a result, the software and tools required to create CNNs/DNNs for embedded targets have been lacking. For embedded machine learning, this has meant that intricate knowledge of both embedded processing platforms and neural network creation has been a prerequisite for bringing AI to the embedded edge. Most organizations do not have that combination of expertise, and applying it is extremely time consuming for those that do.

Thanks to embedded silicon vendors, this paradigm is set to shift.

Embedded processor vendors enable AI at the edge through silicon, software

2014 saw NVIDIA take what is widely regarded as one of the first forays into embedded machine learning with its Jetson TK1 development kit, and since then the company has continued to drive down the power consumption and development hurdles of supercomputing at the edge. Recently the Jetson ecosystem was extended again with the release of the Jetson TX2 module, an embedded board based on NVIDIA’s Pascal architecture that adds a dual-core Denver heterogeneous multiprocessor (HMP) and doubles both the performance and the capacity of memory and storage.

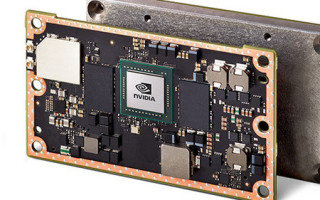

[Figure 1 | NVIDIA's Jetson TX2 combines a 256 CUDA-core Pascal GPU with a dual Denver 2/2 MB L2 heterogeneous multiprocessor (HMP) and a quad-core ARM A57/2 MB L2 CPU complex to deliver twice the performance or energy efficiency of the Jetson TX1 module in edge machine learning applications.]

The real achievement of the Jetson TX2 is its energy efficiency, however. In a March 6th press briefing, Deepu Talla, Vice President and General Manager of NVIDIA’s Tegra product line, explained that the TX2 provides a 2x improvement in that area over the TX1 (or less than 7.5 watts of total power consumption). An update of the JetPack software development kit (SDK) to version 3.0 also enables faster development of neural network-based systems through support for the Linux kernel 4.4 and Multimedia API 27.1, which form the basis for exploiting the TensorRT and cuDNN (deep learning); VisionWorks and OpenCV (computer vision); Vulkan and OpenGL (graphics); and libargus (media) libraries.

[Figure 2 | NVIDIA's optimized JetPack 3.0 software development kit (SDK) enables twice the system performance while integrating support for the Linux kernel 4.4 and Multimedia API 27.1 in the development of high-performance deep learning, computer vision, graphics, and media applications.]

Going even deeper into AI at the edge is another processor vendor well known to the embedded space: Xilinx officially unveiled its reVISION software stack on March 13th. reVISION addresses machine learning application, algorithm, and platform development for engineers working with Xilinx Zynq architectures, but does so in an extensible way that allows software developers to use C, C++, or OpenCL in an Eclipse-based environment when working with frameworks such as Caffe or OpenCV. The stack also supports neural networks such as AlexNet, FCN, GoogLeNet, SqueezeNet, and SSD.

[Figure 3 | Xilinx's reVISION software stack accelerates the development of computer vision and machine learning applications by integrating Caffe and OpenVX application libraries, OpenCV and CNN/DNN algorithm development, and a flexible hardware architecture based on Zynq and MPSoC silicon.]

Taken together, this approach not only reduces the hardware expertise required but also allows developers, for example, to use Caffe-generated .prototxt files to configure an ARM-based software scheduler that drives CNN inference accelerators pre-optimized to run on Xilinx programmable logic. According to Steve Glaser, Senior Vice President of Corporate Strategy at Xilinx, combining the reVISION software stack with FPGA technology enables low-latency sensor inferencing and control down to 8-bit resolutions in a power envelope as low as the mid-3 watt range. In addition, the FPGA fabric helps future-proof designs as interface, algorithm, and system requirements evolve over time.
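To illustrate what inferencing at 8-bit resolution involves, the following minimal sketch applies generic symmetric linear quantization to a handful of floating-point weights. It is not the reVISION implementation, whose internals are not described here; the function names and the sample weights are hypothetical and chosen only for the example.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers with one shared scale factor.

    Symmetric scheme: the largest magnitude in `values` maps to +/-127.
    Returns the integer codes and the scale needed to recover the floats.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range used here.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from 8-bit codes."""
    return [c * scale for c in codes]


# Hypothetical layer weights, for illustration only.
weights = [0.5, -1.27, 0.02, 1.0]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("8-bit codes:", codes)
print("scale:", scale)
print("worst-case rounding error:", max_error)
```

Each weight now occupies one byte instead of four, and the rounding error is bounded by half the scale factor. Narrower integer arithmetic of this kind generally maps well onto programmable logic, although the accuracy cost depends on the network and has to be evaluated case by case.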

[Figure 4 | In addition to a 6x improvement in images per second per watt for machine learning inferencing, 40x better frames per second per watt in computer vision processing, and 1/5th the latency of GPU-based computing architectures, Xilinx officials say the use of FPGA fabric helps future-proof machine learning designs against changing interfaces and algorithms.]

Based on the power consumption benchmarks from both companies, AI technology is quickly approaching deeply embedded levels. Be on the lookout: True intelligence at the edge is upon us.