Having a greener cloud will pay dividends

April 09, 2015

Power efficiency in the cloud is a big deal, particularly as the number of servers grows at a staggering pace.

The broadband and smartphone revolutions have dramatically changed how we consume and interact with information in our professional and private lives. Storage and computing servers, colloquially known as the “cloud,” increasingly host most of the major applications. They use fast Internet connections and powerful resources to consolidate and process far-flung data and respond rapidly to users.

This paradigm holds the promise of instant and always-on response, ubiquitous access, and a lower capital investment for clients. Cloud servers are an indispensable part of our daily activities, whether for consumer applications like Netflix, Facebook, or Siri; the industrial machine-to-machine (M2M) activity that’s the basis of the Internet of Things (IoT); or enterprise solutions such as SAP and Salesforce.

Driven by these forces, server capacity has grown at a remarkable rate. Cloud computing is estimated to have grown from essentially zero in 2006 – when Amazon launched its Amazon Web Services (AWS) platform – to a $58 billion market in 2014. According to Forrester Research, the public cloud market (excluding captive data centers from Amazon, Google, and others) is predicted to reach $191 billion by the end of the decade. By way of comparison, World Semiconductor Trade Statistics (WSTS) forecasts worldwide sales for the entire semiconductor industry to reach $333 billion in 2014.

These revenue figures already include the effects of lower prices as the technology matures and competition increases, so the cloud’s growth in raw compute horsepower has been even more striking. By one estimate, AWS, the leading cloud service provider, has deployed more than 2.8 million servers worldwide.

Architecturally, servers have also evolved significantly by moving to hyperscale and multi-threaded structures, and processor cores have significantly improved their raw throughput. Additional design techniques, such as changing clock speed and supply voltage on the fly and varying the number of simultaneously operating cores, have all enabled greater dynamic response to computing load requirements. However, they’ve also added significant complexity to power delivery requirements.

Growing consumption

Most relevant is the electrical energy consumed by these data centers; as the installed base of servers has mushroomed, so has their energy consumption. Obtaining exact company numbers is difficult, but one representative data center designed to consume 3 MW of power hosts more than 8000 servers. Google estimated in 2011 that its data centers alone continuously draw about 260 MW, which is about 25 percent of the output of a state-of-the-art nuclear power plant. To ease power transmission challenges, many large data centers worldwide are located close to massive power sources like the Columbia River hydroelectric power system.

Estimates for 2010 put data centers at between 1 percent and 1.5 percent of global electricity consumption, which is equivalent to Brazil’s total consumption. In the U.S., data centers account for closer to 2 percent of the country’s total electricity consumption, which is equal to New Jersey’s total consumption. Essentially, data centers had added another New Jersey to the U.S. electric grid by the end of 2010, and the load continues to grow.

This massive growth in power consumption has a significant economic impact. Although processor cores offer greater processing capability as they follow Moore’s Law and benefit from architectural improvements, their voltages haven’t scaled fast enough to lower overall power consumption.

Data centers primarily use power in two ways: to supply the needed energy for the computers and to cool them sufficiently, keeping systems within their operational range. Because every watt lost in power conversion becomes heat that must also be removed, small improvements in the delivery of power have a leveraged beneficial impact on the bottom line. In addition to the reduced power bill, efficient power delivery can increase data center capacity for a given budget. This is an important consideration given that the installed capacity is continuing its brisk pace of double-digit annual growth.
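The leverage described above can be illustrated with a rough back-of-the-envelope model. The sketch below assumes that all power drawn by the conversion chain ends up as heat and that cooling power scales linearly with that heat. The function names, the cooling overhead factor, the efficiencies, and the electricity price are all hypothetical values chosen for illustration, not figures from any particular data center.

```python
HOURS_PER_YEAR = 8760


def facility_power_kw(load_kw, conversion_efficiency, cooling_overhead):
    """Estimate total facility draw for a given compute load.

    load_kw: power actually delivered to the processors and memory.
    conversion_efficiency: fraction of input power that reaches the load (0-1).
    cooling_overhead: assumed kW of cooling needed per kW of heat removed.
    """
    # Power drawn by the conversion chain; essentially all of it becomes heat.
    input_kw = load_kw / conversion_efficiency
    # Cooling must remove that heat, so it scales with the input power.
    return input_kw * (1 + cooling_overhead)


def annual_savings_usd(load_kw, eff_before, eff_after, cooling_overhead, usd_per_kwh):
    """Yearly cost difference between two conversion efficiencies."""
    before = facility_power_kw(load_kw, eff_before, cooling_overhead)
    after = facility_power_kw(load_kw, eff_after, cooling_overhead)
    return (before - after) * HOURS_PER_YEAR * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical figures: a 1 MW compute load, a two-point efficiency gain.
    saved = annual_savings_usd(
        load_kw=1000, eff_before=0.85, eff_after=0.87,
        cooling_overhead=0.5, usd_per_kwh=0.07,
    )
    print(f"Estimated annual savings: ${saved:,.0f}")
```

In this model the cooling term multiplies every watt saved in conversion, which is why a small efficiency gain shows up as a larger reduction at the facility level.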

Power distribution

State-of-the-art data center power distribution consists of a series of stepped-down voltages followed by point-of-load power delivery. Raw efficiency is the greatest challenge, but power systems can also add capabilities in a few different ways to enable lower energy consumption.

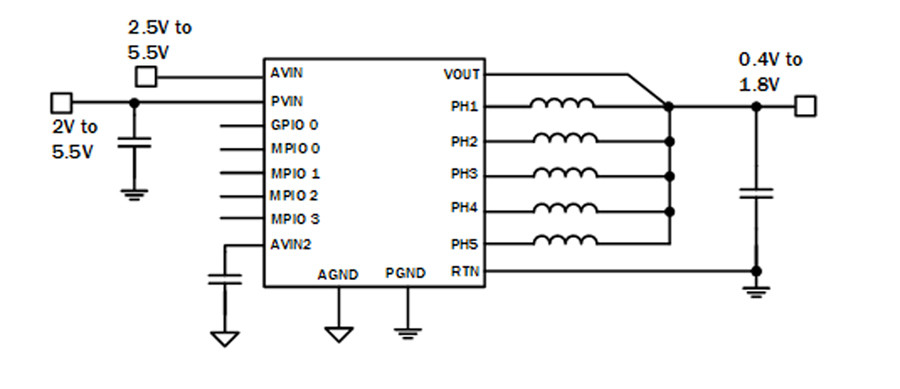

The latest generation of infrastructure power converters supports multi-phase operation and holds efficiency close to its peak from light load to peak load. These converters do so by paralleling multiple power delivery phases, which the controller modulates based on the power draw requirement. Figure 1 shows a typical multi-phase system, where the regulator modulates each inductor to provide a variable amount of current.

Multi-phase operation improves on a key shortcoming of single-phase converters, where the efficiency peaks at a nominal load, but drops at high loads. Figure 2 shows how a multi-phase system can intelligently select the number of phases depending on the load. Flattening the efficiency curve across a larger portion of the operating range frees data center planners from choosing between optimizing for typical and maximum workloads.
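One way to see why the number of active phases matters is a simplified loss model. The sketch below assumes that each active phase has a fixed overhead (switching and gate-drive losses) plus a conduction loss proportional to the square of its share of the load current. The parameter values and function names are hypothetical; real controllers use their own, more detailed criteria.

```python
def converter_loss_w(load_a, phases, fixed_loss_w=0.5, resistance_ohm=0.005):
    """Total loss when `phases` identical phases share the load equally.

    fixed_loss_w: assumed per-phase overhead that is paid whenever a phase runs.
    resistance_ohm: assumed per-phase series resistance (conduction loss).
    """
    per_phase_a = load_a / phases
    return phases * (fixed_loss_w + per_phase_a ** 2 * resistance_ohm)


def efficiency(load_a, phases, vout_v=1.0, **loss_params):
    """Output power divided by output power plus loss."""
    p_out = vout_v * load_a
    return p_out / (p_out + converter_loss_w(load_a, phases, **loss_params))


def best_phase_count(load_a, max_phases=6, **loss_params):
    """Pick the phase count with the lowest loss for this load."""
    return min(
        range(1, max_phases + 1),
        key=lambda n: converter_loss_w(load_a, n, **loss_params),
    )


if __name__ == "__main__":
    for load in (5, 10, 20, 30, 45, 60):
        n = best_phase_count(load)
        print(f"{load:>3} A: {n} phase(s), efficiency {efficiency(load, n):.1%}")
```

With these assumed parameters, a single phase is most efficient at light load because the fixed overhead dominates, while heavy loads favor more phases because splitting the current cuts the conduction loss.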

Dividing processor cores into “power islands” enables parts of the system to shut down when they aren’t in use. Power delivery systems must therefore supply multiple rails simultaneously.

Today’s processor cores communicate their anticipated power demand to the power converters over a digital bus (typically PMBus). The changing load can be a function of additional cores coming online, varying processor clock speed, or the knowledge that the software is processing a particularly intensive sequence. With insight into the expected loading, the controllers can maximize the efficiency across the load curve. The ability to manage the duration and level of energy consumed provides another big advantage to service providers: they can use the duration and intensity of system activity to calculate the billing for each process.
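As an illustration of billing by duration and intensity, the sketch below integrates a series of timestamped power readings into energy and applies a flat rate. It assumes the readings are already available as (seconds, watts) pairs; how they are collected, the function names, and the rate are hypothetical.

```python
def energy_kwh(samples):
    """Integrate (time_s, power_w) samples, sorted by time, into kWh.

    Uses the trapezoidal rule between consecutive samples.
    """
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += 0.5 * (p0 + p1) * (t1 - t0)
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


def bill_usd(samples, usd_per_kwh):
    """Charge for a process based on the energy it consumed."""
    return energy_kwh(samples) * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical one-hour job: steady at 100 W, then ramping to 200 W.
    readings = [(0, 100.0), (1800, 100.0), (3600, 200.0)]
    print(f"Energy: {energy_kwh(readings):.3f} kWh")
    print(f"Charge: ${bill_usd(readings, usd_per_kwh=0.10):.4f}")
```

A real billing scheme would likely add fixed charges and attribute shared overhead such as cooling, which this sketch leaves out.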

Green lining

There is a green lining to this “cloud.” Even if it uses a significant amount of energy, the efficient consolidation of computing tasks in the cloud – which employs some of the most powerful computation systems ever to exist – still holds the promise of lowering the overall amount of energy required to perform tasks across discrete systems. The data center’s evolving power delivery requirements offer semiconductor manufacturers significant opportunities to innovate and create new breakthroughs.