Embedded vision for Industry 4.0

June 16, 2017

Machine vision is a term commonly used for embedded vision systems operating in an industrial context. It therefore spans a wide range of applications, from optical inspection of manufactured goods such as semiconductors and solar panels to postal and parcel sorting, industrial X-ray inspection, and food packaging.

There are several high-level trends facing most machine vision applications today:

- Ubiquity of machine vision

- Embedding intelligence from machine learning at the edge

- Open, high-level languages and frameworks

- Multi-level, multi-factor security

The ubiquity of machine vision is closely coupled to what some leading industry figures have termed Industry 4.0. This is driven by the economic benefits of including machine vision within the manufacturing flow: it enables new assembly and inspection methods along with improved logistics and workflow tracking. These capabilities bring not only higher yields but also shorter throughput times, which offset the cost of an embedded vision implementation. As adoption spreads to more applications, it raises several technology challenges, driving demand for higher frame rates and resolutions, wider connectivity bandwidth, smaller form factors, and lower cost.

Along with widespread adoption of the underlying technology, the applications executed on machine vision systems are becoming increasingly complex. A system may be required, for example, to detect defects in manufactured parts, recognize objects, and make decisions based on what it recognizes. Embedding this intelligence at the edge, within the camera itself, means that users do not need to transfer each image to a high-performance, centralized processing node. Embedded intelligence does, however, require enough processing capacity to run the algorithm at the required frame rate, resolution, and latency within the machine vision system.
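The frame-rate requirement can be made concrete with simple arithmetic: at a fixed frame rate, each frame must be handled within 1/fps seconds. The sketch below assumes a hypothetical 1920 × 1080 sensor at 60 frames/s and made-up stage timings, and checks a pipeline against that budget in two ways: sequentially (the sum of stage times must fit, which also bounds latency) and pipelined (stages work on different frames at once, so only the slowest stage must fit).

```python
# Per-frame processing budget for an edge vision pipeline.
# The resolution, frame rate, and stage timings are hypothetical examples.
from typing import List


def frame_budget_ms(fps: float) -> float:
    """Time available per frame (ms) when frames arrive at `fps`."""
    return 1000.0 / fps


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second the pipeline must sustain."""
    return width * height * fps


def fits_sequential(stage_ms: List[float], fps: float) -> bool:
    """Stages run one after another on the same frame: the total must fit."""
    return sum(stage_ms) <= frame_budget_ms(fps)


def fits_pipelined(stage_ms: List[float], fps: float) -> bool:
    """Stages overlap on successive frames: only the slowest stage must fit.
    Latency is still the sum of the stage times."""
    return max(stage_ms) <= frame_budget_ms(fps)


if __name__ == "__main__":
    fps = 60.0
    stages = [4.0, 6.5, 3.0]  # hypothetical: debayer, filter, classify (ms)
    print(f"budget per frame: {frame_budget_ms(fps):.2f} ms")               # 16.67 ms
    print(f"pixel rate: {pixel_rate(1920, 1080, fps) / 1e6:.1f} Mpixel/s")  # 124.4
    print("sequential fits:", fits_sequential(stages, fps))                 # True
    print("pipelined fits:", fits_pipelined([10.0, 10.0], fps))             # True
```

A pipeline of two 10 ms stages fails the sequential check but passes the pipelined one: it keeps up with 60 frames/s, at the cost of 20 ms latency per frame.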

To aid the development and implementation of intelligence at the edge, machine vision developers use open-source, high-level languages and frameworks such as OpenCV and OpenVX for image processing, and Caffe for machine learning. These frameworks greatly reduce algorithm development time and let developers focus on value-added, market-differentiating work.
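Defect detection often starts with simple primitives from libraries such as OpenCV. For example, thresholding an image and counting the pixels that pass could be done with `cv2.threshold(img, thresh, 255, cv2.THRESH_BINARY)` followed by `cv2.countNonZero`. The sketch below reimplements those two steps in plain Python so it is self-contained; the pass/fail rule in `has_defect` and its parameters are hypothetical, not taken from any particular inspection system.

```python
# Minimal defect check: flag pixels brighter than a threshold and count them.
# Mirrors cv2.threshold(..., cv2.THRESH_BINARY) followed by cv2.countNonZero,
# written in plain Python to stay self-contained.
from typing import List

Image = List[List[int]]  # rows of 8-bit grayscale values


def threshold_binary(img: Image, thresh: int, maxval: int = 255) -> Image:
    """Set pixels > thresh to maxval and all others to 0 (THRESH_BINARY rule)."""
    return [[maxval if p > thresh else 0 for p in row] for row in img]


def count_nonzero(img: Image) -> int:
    """Number of non-zero pixels in the image."""
    return sum(1 for row in img for p in row if p != 0)


def has_defect(img: Image, thresh: int, max_defect_pixels: int) -> bool:
    """Hypothetical rule: reject the part if too many pixels exceed `thresh`."""
    return count_nonzero(threshold_binary(img, thresh)) > max_defect_pixels


if __name__ == "__main__":
    part = [[10, 12, 11],
            [13, 240, 12],
            [11, 250, 10]]  # two bright spots on a dark part
    print(count_nonzero(threshold_binary(part, 128)))  # 2
    print(has_defect(part, 128, 1))                   # True
```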

Because of the nature of these applications, safety and security are inherent requirements: there must be no potential for injury or malicious external interference. This becomes especially important when machine vision systems are connected to the Internet. Meeting these requirements means addressing safety and security architectures at the system level, but device selection must also be considered to ensure support for capabilities such as secure configuration and reliable design flows.
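Secure configuration boils down to refusing to load a configuration image that cannot be authenticated. The sketch below illustrates that principle with an HMAC-SHA256 tag from Python's standard library; it is a generic example, not the secure-boot mechanism of any Zynq device, which provides its own device-specific authentication features. The key handling and the `load_configuration` function are hypothetical.

```python
# Generic illustration: authenticate a configuration image before loading it.
# Uses HMAC-SHA256 from the standard library; not a device secure-boot flow.
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the configuration image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_image(image: bytes, tag: bytes, key: bytes) -> bool:
    """Constant-time comparison of the expected and supplied tags."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


def load_configuration(image: bytes, tag: bytes, key: bytes) -> bytes:
    """Hypothetical loader: reject any image whose tag does not verify."""
    if not verify_image(image, tag, key):
        raise ValueError("configuration image failed authentication; refusing to load")
    return image  # placeholder for handing the image to the real loader
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many bytes of a forged tag were correct.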

These high-level drivers are having a considerable effect on the machine vision market and driving a new architectural paradigm.

Architectural choices

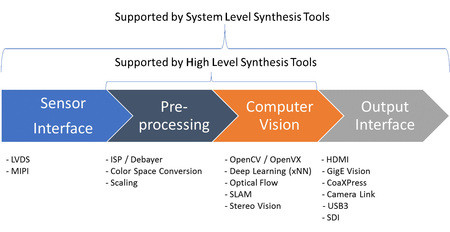

Considering the high-level architecture of a machine vision system, the following system elements have been identified:

- Lens and lighting solution to allow the image to be focused and illuminated

- Camera to capture the image

- High-speed interconnect to transfer the image

- PC with frame grabber and application software or storage

Until recently, the traditional approach has been to build machine vision systems around a PC-based architecture because of its ease of deployment, large ecosystem of software vendors, and low implementation cost. However, PC architectures also have several disadvantages: lower performance than the alternative solutions, a large footprint, higher power dissipation, and limited scalability, which Industry 4.0 demands.

A second approach is to add GPU acceleration to a PC-based architecture; this approach shares the advantages of a purely PC-based one. While it does enable faster prototyping of machine vision algorithms using high-level frameworks, it also increases power dissipation beyond that of a PC-based solution and requires expertise in implementing algorithms on the GPU. Further, it still presents scalability issues.

With both PC- and GPU-based approaches, it is difficult to implement processing at the edge. A third architectural approach enables such a move and provides considerable benefits in the size, weight, power, and cost (SWaP-C) of the machine vision system.

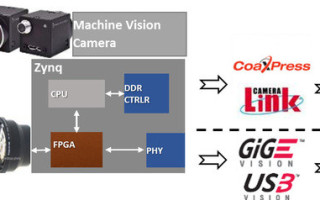

This third approach is a fully integrated system that implements the machine vision system on a single device combining programmable logic with traditional embedded processors, such as the Xilinx All Programmable Zynq-7000 SoC and Zynq UltraScale+ MPSoC. Such a highly integrated solution offers the highest performance per watt (PPW) of the alternatives considered here. These devices combine high-performance ARM processors (Cortex-A9 in the Zynq-7000 SoC, Cortex-A53 in the Zynq UltraScale+ MPSoC) with programmable logic, enabling developers to build a custom image processing pipeline that implements the machine vision algorithms required for intelligence at the edge.
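A custom image processing pipeline in programmable logic typically processes pixels as a stream rather than storing whole frames. A common pattern for windowed operations is the line buffer: only the last few image lines are kept, so a 3x3 window is available as each new pixel arrives. The Python model below is a behavioral sketch of that pattern (not HLS or RTL code), with a 3x3 box filter standing in for whatever algorithm a real pipeline would implement.

```python
# Software model of a streaming 3x3 box filter built on line buffers.
# Pixels arrive one at a time in raster order; only three line-length
# buffers are held (two complete lines plus the one being received).
from typing import Iterable, Iterator, Tuple


def stream_box3x3(pixels: Iterable[int], width: int) -> Iterator[Tuple[int, int, int]]:
    """Yield (row, col, mean) for every interior pixel, where mean is the
    floor of the average of the 3x3 neighbourhood centred on (row, col)."""
    older = [0] * width     # line y-2
    previous = [0] * width  # line y-1
    current = [0] * width   # line y, filled as pixels arrive
    for i, p in enumerate(pixels):
        y, x = divmod(i, width)
        current[x] = p
        # The 3x3 window ending at (y, x) is complete once 3 lines and 3 columns exist.
        if y >= 2 and x >= 2:
            total = sum(line[x - k] for line in (older, previous, current) for k in range(3))
            yield (y - 1, x - 1, total // 9)
        if x == width - 1:
            # End of line: rotate buffers; the oldest one is reused for the next line.
            older, previous, current = previous, current, older


if __name__ == "__main__":
    # 3 rows x 4 columns with values 0..11; only two interior positions exist.
    print(list(stream_box3x3(range(12), 4)))  # [(1, 1, 5), (1, 2, 6)]
```

Memory grows with image width, not image height, which is what makes this structure attractive for on-chip memory in programmable logic.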

[Figure 1 | Example Zynq-based scalable solution showing major functional blocks.]

An All Programmable SoC-based solution is also scalable, and it makes it quick and easy to implement heterogeneous and homogeneous sensor fusion and any-to-any connectivity.
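Before data from several sensors can be fused, their samples have to be aligned in time. A minimal sketch, assuming each sensor delivers sorted timestamps on a shared clock (real systems need clock synchronization to make that true), pairs each camera frame with the nearest sample from a second sensor and rejects pairs further apart than a tolerance. The function name and the millisecond timestamps are hypothetical.

```python
# Pair each reference timestamp with the nearest timestamp from another sensor.
# Assumes both lists are sorted and share a common time base.
from bisect import bisect_left
from typing import List, Optional


def nearest_match(ref_ts: List[float], other_ts: List[float],
                  tolerance: float) -> List[Optional[int]]:
    """Return, for each ref timestamp, the index of the closest other_ts entry,
    or None if the closest one is more than `tolerance` away."""
    matches: List[Optional[int]] = []
    for t in ref_ts:
        i = bisect_left(other_ts, t)  # first entry >= t
        best: Optional[int] = None
        for j in (i - 1, i):          # the neighbours on either side of t
            if 0 <= j < len(other_ts):
                if best is None or abs(other_ts[j] - t) < abs(other_ts[best] - t):
                    best = j
        if best is not None and abs(other_ts[best] - t) <= tolerance:
            matches.append(best)
        else:
            matches.append(None)
    return matches


if __name__ == "__main__":
    camera_ms = [0, 33, 66, 100]   # hypothetical ~30 frames/s camera
    thermal_ms = [1, 30, 70, 140]  # hypothetical second sensor
    print(nearest_match(camera_ms, thermal_ms, tolerance=5))  # [0, 1, 2, None]
```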

Connectivity

Another significant benefit of a fully integrated solution that uses an All Programmable SoC is the flexibility of I/O. This means that it is possible to implement any-to-any connectivity. For the designer, it provides the ability to:

- Interface to any image sensor

- Interface to any camera

- Interface to any downstream display or communication protocol

- Convert from one standard to another
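As a small, concrete instance of converting between formats, a camera may deliver 12-bit pixels packed two per three bytes while a downstream consumer expects one pixel per 16-bit word. The sketch assumes an LSB-first packing (pixel 0 in byte 0 plus the low nibble of byte 1; pixel 1 in the high nibble of byte 1 plus byte 2), similar to the GenICam PFNC Mono12p layout; other 12-bit formats, such as GigE Vision's older Mono12Packed, order the bits differently, so check the format specification before reusing it. In programmable logic this would be a small streaming stage; here it is modeled in Python.

```python
# Convert LSB-first packed 12-bit pixels to one-pixel-per-16-bit-word output.
# The bit layout is an assumption (see lead-in); verify it against your format.
import struct
from typing import List


def unpack_12bit_lsb_first(data: bytes) -> List[int]:
    """Unpack LSB-first 12-bit pixels (2 pixels per 3 bytes) into integers."""
    if len(data) % 3:
        raise ValueError("expected a multiple of 3 bytes (2 pixels per 3 bytes)")
    pixels = []
    for i in range(0, len(data), 3):
        b0, b1, b2 = data[i], data[i + 1], data[i + 2]
        pixels.append(b0 | ((b1 & 0x0F) << 8))  # pixel 0: byte 0 + low nibble of byte 1
        pixels.append((b1 >> 4) | (b2 << 4))    # pixel 1: high nibble of byte 1 + byte 2
    return pixels


def pack_12bit_lsb_first(pixels: List[int]) -> bytes:
    """Inverse of unpack_12bit_lsb_first; needs an even number of 12-bit values."""
    if len(pixels) % 2 or any(not 0 <= p <= 0xFFF for p in pixels):
        raise ValueError("expected an even number of values in 0..4095")
    out = bytearray()
    for p0, p1 in zip(pixels[0::2], pixels[1::2]):
        out += bytes((p0 & 0xFF, (p0 >> 8) | ((p1 & 0x0F) << 4), p1 >> 4))
    return bytes(out)


def to_16bit_le(pixels: List[int]) -> bytes:
    """Re-emit each pixel as a little-endian 16-bit word (one pixel per word)."""
    return struct.pack(f"<{len(pixels)}H", *pixels)


if __name__ == "__main__":
    packed = bytes([0xBC, 0x3A, 0x12])
    pixels = unpack_12bit_lsb_first(packed)
    print([hex(p) for p in pixels])  # ['0xabc', '0x123']
    print(to_16bit_le(pixels).hex())  # bc0a2301
```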

These capabilities allow the developer both to meet current interface requirements and to implement future upgrades and new interface standards as the product roadmap evolves. For example, depending on whether a revision changes the physical layer, it may be possible to implement a revision of a protocol already in use by remotely updating the programming file.

Within the machine vision market, GigE Vision, Camera Link, and USB3 are very popular connectivity standards, and many of these interfaces are available in IP libraries that reduce development time. Such IP provides a platform that can be used at a higher level of abstraction, which is needed to gain the maximum benefit from an SoC because it enables the use of industry-standard frameworks and libraries.
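Choosing among these interfaces starts with the raw data rate: width × height × bits per pixel × frames per second. The sketch below compares that rate against nominal line rates (Gigabit Ethernet at 1 Gbit/s, Camera Link Base at 24 bits per clock at 85 MHz, USB 3.0 SuperSpeed at 5 Gbit/s). These nominal figures ignore encoding and protocol overhead, and the 80% usable fraction is a crude, hypothetical allowance for it rather than a value from any of the standards.

```python
# Rough check of which camera links can carry an uncompressed video stream.
# Rates are nominal line rates in Gbit/s and ignore encoding and protocol
# overhead; the usable fraction is a hypothetical allowance for that.
from typing import List

NOMINAL_LINK_GBPS = {
    "GigE Vision": 1.0,        # 1000BASE-T Gigabit Ethernet
    "Camera Link Base": 2.04,  # 24 bits per clock at 85 MHz
    "USB3": 5.0,               # USB 3.0 SuperSpeed signalling rate
}


def stream_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw (uncompressed) video data rate in Gbit/s."""
    return width * height * bits_per_pixel * fps / 1e9


def links_that_fit(width: int, height: int, bits_per_pixel: int, fps: float,
                   usable_fraction: float = 0.8) -> List[str]:
    """Links whose derated nominal rate can carry the stream."""
    need = stream_gbps(width, height, bits_per_pixel, fps)
    return [name for name, rate in NOMINAL_LINK_GBPS.items()
            if need <= rate * usable_fraction]


if __name__ == "__main__":
    # Hypothetical 1920x1080, 8-bit monochrome sensor at 60 frames/s.
    print(f"{stream_gbps(1920, 1080, 8, 60):.3f} Gbit/s")  # 0.995 Gbit/s
    print(links_that_fit(1920, 1080, 8, 60))               # ['Camera Link Base', 'USB3']
```

Doubling the frame rate or moving to a higher-resolution sensor scales the required rate linearly, which is why the demand for wider connectivity bandwidth grows with adoption.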

Design entry

The reVISION acceleration stack enables developers to implement computer vision and machine learning techniques using industry-standard frameworks and libraries. reVISION supports development targeting the All Programmable Zynq-7000 SoC and Zynq UltraScale+ MPSoC. To do so, it combines a wide range of resources for platform, application, and algorithm development, organized into three distinct levels:

1. Platform Development – This is the lowest level of the stack and is the one on which the remaining layers of the stack are built. This layer provides the platform definition for the SDSoC tool, and it is at this level where the any-to-any connectivity is implemented.

2. Algorithm Development – The middle layer of the stack provides support for implementing the required algorithms, including acceleration of both image processing functions and machine learning inference engines into the programmable logic.

3. Application Development – The highest layer of the stack provides support for industry-standard frameworks, which are used to develop the application on top of the platform and algorithm development layers.
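The separation between the three layers can be illustrated with a small Python model: the platform layer records which kernels the loaded hardware design exposes, the algorithm layer offers one entry point per function and falls back to software when no hardware version exists, and the application layer calls that entry point without knowing where it runs. None of these class or function names belong to reVISION or SDSoC; they are hypothetical and only illustrate the layering.

```python
# Hypothetical three-layer model: platform -> algorithm -> application.
from typing import Callable, Dict, List

Kernel = Callable[[List[int]], List[int]]


class Platform:
    """Platform layer: kernels the (hypothetical) hardware design exposes."""
    def __init__(self, hw_kernels: Dict[str, Kernel]):
        self.hw_kernels = hw_kernels


class AlgorithmLayer:
    """Algorithm layer: one entry point per function, preferring hardware."""
    def __init__(self, platform: Platform):
        self.platform = platform
        self.sw_kernels: Dict[str, Kernel] = {
            "invert": lambda px: [255 - p for p in px],  # software fallback
        }

    def backend_for(self, name: str) -> str:
        return "hw" if name in self.platform.hw_kernels else "sw"

    def run(self, name: str, pixels: List[int]) -> List[int]:
        table = self.platform.hw_kernels if self.backend_for(name) == "hw" else self.sw_kernels
        return table[name](pixels)


def application(algos: AlgorithmLayer, frame: List[int]) -> List[int]:
    """Application layer: written once, unaware of where 'invert' executes."""
    return algos.run("invert", frame)
```

The application code is identical whether the platform provides an accelerated `invert` or not; only the platform description changes.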

Both the algorithm and application levels of the stack are designed to support a traditional image processing flow as well as a machine learning flow. Within the algorithm layer, support is provided for developing image processing algorithms using the OpenCV library, including the ability to accelerate a significant number of OpenCV functions (including the OpenVX core subset) into the programmable logic. To support machine learning, the algorithm development layer provides several predefined hardware functions that can be placed within the programmable logic (PL) to implement a machine learning inference engine. The application development layer then uses these image processing algorithms and inference engines to create the final application, and provides support for high-level frameworks such as OpenVX and Caffe.
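Inference engines in programmable logic commonly use reduced-precision integer arithmetic, because integer multiply-accumulate units are much cheaper than floating-point ones. The sketch below assumes symmetric per-tensor INT8 quantization, one common choice among several and not necessarily what reVISION's predefined hardware functions use: values are scaled into [-127, 127], the dot product is computed with integer MACs, and the result is rescaled to a real value.

```python
# INT8 symmetric quantization of a dot product (the core of conv/FC layers).
# The quantization scheme is an assumption chosen for illustration.
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] with a single scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int8_dot(x: List[float], w: List[float]) -> float:
    """Approximate dot(x, w) using integer multiply-accumulates."""
    qx, sx = quantize_symmetric(x)
    qw, sw = quantize_symmetric(w)
    acc = sum(a * b for a, b in zip(qx, qw))  # integer MACs, as hardware would do
    return acc * sx * sw                       # rescale the accumulator to real units


if __name__ == "__main__":
    x = [0.5, -1.0, 0.25]
    w = [0.2, 0.4, -0.8]
    exact = sum(a * b for a, b in zip(x, w))
    print(exact, int8_dot(x, w))  # close, but not identical
```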

Using reVISION to implement an embedded vision or machine learning application reduces development time and yields a more responsive, power-efficient, and flexible solution. Compared with GPU-based solutions, an All Programmable Zynq-7000 SoC or Zynq UltraScale+ MPSoC offers one-fifth the latency, along with up to 6X higher efficiency in images/sec/watt for machine learning applications and up to 42X higher efficiency in frames/sec/watt for embedded vision.
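Efficiency figures such as images/sec/watt are throughput divided by power, which is the same as work done per joule; an "N-times" claim is the ratio of two such figures. The numbers below are made up purely to show the arithmetic and are not the measurements behind the ratios quoted above.

```python
# Performance per watt and the "N-times" efficiency ratio between platforms.
# The throughput and power figures are hypothetical, not measured results.
from typing import Tuple


def perf_per_watt(throughput_per_s: float, power_w: float) -> float:
    """Work per second per watt, i.e. work per joule (e.g. images/sec/watt)."""
    return throughput_per_s / power_w


def efficiency_ratio(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """How many times more work per joule platform `a` delivers than `b`.
    Each argument is (throughput per second, power in watts)."""
    return perf_per_watt(*a) / perf_per_watt(*b)


if __name__ == "__main__":
    soc = (300.0, 10.0)   # hypothetical: 300 images/s at 10 W  -> 30 images/s/W
    gpu = (600.0, 100.0)  # hypothetical: 600 images/s at 100 W -> 6 images/s/W
    print(efficiency_ratio(soc, gpu))  # 5.0
```

In this made-up case the first platform has half the raw throughput yet is five times more efficient, which is why such comparisons are normalized per watt.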

Seeing the future of industry

Machine vision is one of the fastest-growing and most demanding markets in the embedded vision sphere, and it presents developers with several technical challenges. These challenges are best addressed by embedding intelligence at the edge and developing a fully integrated system using the All Programmable Zynq-7000 SoC or Zynq UltraScale+ MPSoC.

When developed within the reVISION acceleration stack, solutions based on these devices are more responsive and deterministic, deliver higher throughput, and are more power efficient, while allowing developers to work with industry-standard frameworks and libraries.

Xilinx

www.xilinx.com/products/design-tools/embedded-vision-zone.html
