Addressing the software challenges of ADAS and automated driving

June 20, 2016

A modern OS can provide a variety of tools to help developers build robust, safety-certifiable systems with off-the-shelf software.

A century ago, removing the horse and replacing it with a motor was a major innovation. Electric starters, automatic transmissions, and radios were next. As electronics became ubiquitous, we saw intermittent wipers, fuel injection, and engine performance enhancements and monitoring. And now, microprocessors and software are fueling the evolution of advanced driver assistance systems (ADAS) to help the driver drive the car.

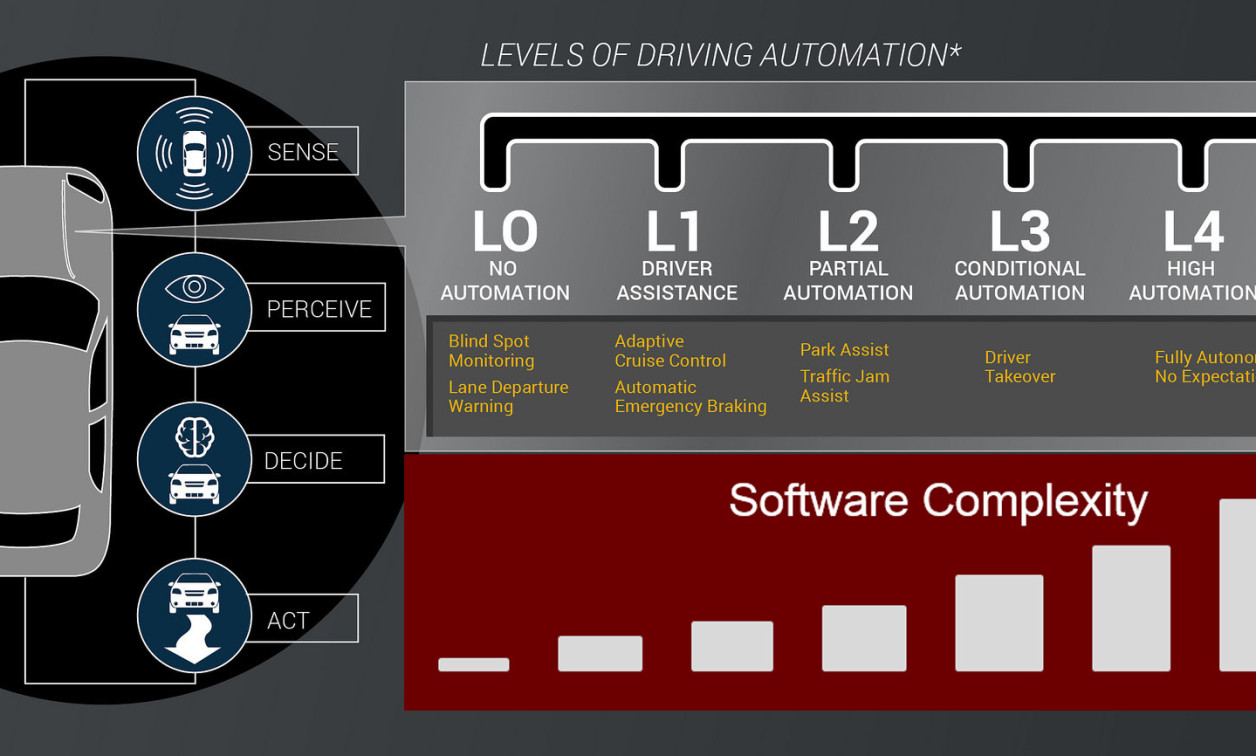

ADAS ranges from passive warnings, through rudimentary and active control, to fully autonomous driving. In passive ADAS, the system warns the driver of a situation, but it is up to the driver to take action (Figure 1). For example, a backup camera equipped with a motion detector can sound an alarm if it detects someone behind the car, but the driver must still apply the brakes. An example of rudimentary ADAS control is adaptive cruise control, which can maintain the speed set by the driver and automatically adjust it to ensure a safe distance from vehicles ahead. An example of active ADAS is automated lane keeping, which not only warns the driver, but actively steers the vehicle to keep it in the lane. Ultimately, such technologies will converge in the autonomous vehicle: a car able to drive itself without human intervention.

[Figure 1 | Passive ADAS systems, such as backup cameras, still require driver intervention.]

Software

ADAS implementations combine hardware (for example, cameras, radar, exotic laser sighting systems) with associated device driver and control software. Additional layers of software provide the communication between modules and implement the system’s high-level functionality, such as avoiding the pedestrian behind the car while it’s in reverse or coordinating the interaction of the braking system, steering system, and cameras to implement the lane keeping feature. Autonomous cars will require even more software.

To deliver a safe and reliable product, developers must use certified components, methods, and tools, but they must also deal with cost constraints. Thus, to develop the product on time and on budget, it makes sense to use commercial off-the-shelf (COTS) software. This approach amortizes the development, testing, and certification costs of the software across multiple OEMs. Likewise, commonality of hardware platforms lends strength to the COTS argument: the difference between a high-end luxury car and a low-end utility car can be stark, but they may both use the same underlying vision system. Once an OEM gains familiarity with a hardware platform, it can integrate that platform into multiple models and lines to reduce costs.

Freedom from interference

The automotive software industry is following in the footsteps of other industries that rely on software and have stringent safety requirements, including medical, rail, and nuclear. Each has processes and certifications to help ensure consistency, quality, and above all, safety. For automotive, the main standard is ISO 26262, “Road Vehicles – Functional Safety.” It’s modeled after IEC 61508 “Functional Safety of Electrical/Electronic/Programmable Electronic Safety-Related Systems,” but deals specifically with the automotive sector and addresses the entire software lifecycle.

A key concept in ISO 26262 is freedom from interference. Simply put, one component cannot interfere with the operations of another. For example, if a system includes both a media player and a rear-view camera, the media player should never interfere with the operation of the camera, even though the two may share the same LCD panel.

The goal of modern OS platforms is to mitigate all forms of interference between software processes. For example, the OS can use the memory management unit (MMU) to ensure that each process runs in a private, hardware-enforced address space that only that process can access. This technique prevents memory interference. But other forms of interference can still occur — for example, in a denial-of-service (DoS) attack, a process could consume all available memory or CPU resources, starving the other processes. Thus, an OS also needs to support resource limits and scheduling guarantees.
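The idea of a resource limit can be sketched with a toy model. The following is a minimal illustration in Python, not an OS interface: the `QuotaAllocator` class, the process names, and the sizes are all assumptions made up for this example. It shows only the accounting principle, namely that each process is charged against its own limit, so a runaway process is refused before it can touch another process's share.

```python
class QuotaAllocator:
    """Toy model of per-process memory limits over one shared pool (hypothetical)."""

    def __init__(self, total_kb, limits_kb):
        # The limits must fit inside the pool, otherwise no guarantee is possible.
        if sum(limits_kb.values()) > total_kb:
            raise ValueError("limits exceed the shared pool")
        self.limits_kb = dict(limits_kb)
        self.used_kb = {name: 0 for name in limits_kb}

    def alloc(self, process, size_kb):
        """Grant the request only if the process stays within its own limit."""
        if self.used_kb[process] + size_kb > self.limits_kb[process]:
            return False
        self.used_kb[process] += size_kb
        return True


pool = QuotaAllocator(total_kb=1024,
                      limits_kb={"media_player": 768, "camera": 256})

# A runaway media player keeps asking for memory until it is refused.
granted = 0
while pool.alloc("media_player", 64):
    granted += 64

# The camera's share was never touched, so its full allocation still succeeds.
camera_ok = pool.alloc("camera", 256)
print(granted, camera_ok)
```

In this sketch the media player is stopped at its 768 KB limit, and the camera can still claim all 256 KB reserved for it.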

OS architecture makes the difference here. Microkernel operating systems, in particular, are much better than their monolithic counterparts at ensuring freedom from interference (Figure 2). For example, consider device drivers. In a monolithic OS such as Linux, the device driver software lives in the kernel. Consequently, a fault in one device driver can corrupt or possibly shut down the entire OS. This is also a serious security weakness, as compromising the least-secure device driver can give an attacker full control over the OS. In a microkernel OS, though, this isn’t the case. Device drivers operate as regular, minimally privileged, memory-protected processes, isolated from each other (and the kernel) through tried-and-true MMU-enforced hardware protection. If one driver fails, the rest of the system continues to work.

[Figure 2 | Microkernel operating systems enable greater freedom from interference.]

Resource limits and adaptive partitioning

Scheduling contention is another form of interference that can occur when multiple processes share a CPU, and it cannot be eliminated simply by assigning priorities. To understand why, consider two processes running at the same priority with no time slicing between them. Due to a bug, one of the processes goes into an infinite loop and runs forever. The other process won’t be scheduled to run because the first process is consuming all available CPU. The solution may appear to be simple: change the priority so that one process has a higher priority than the other. But this simply moves the vulnerability to a different process. When a bug hits the higher-priority process, that process starves other processes of CPU time. To prevent task starvation from ever becoming a concern, system designers can use adaptive partitioning, an advanced technique that guarantees CPU time to sets of processes or threads (known as partitions), while allowing partitions to exceed their time budgets when spare processing cycles are available.
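The starvation problem can be reproduced with a small simulation. The sketch below is a simplified model written for this article, not a real scheduler: the function `run_strict_priority`, the task names, and the tick counts are assumptions. It models a strict-priority scheduler that does not time-slice between tasks of equal priority.

```python
import math

SPIN = math.inf  # a task stuck in an infinite loop never finishes its work


def run_strict_priority(tasks, ticks):
    """Simulate a strict-priority scheduler for a number of ticks.

    tasks maps a name to (priority, work_in_ticks); a higher number wins.
    Returns how many ticks each task received.
    """
    remaining = {name: work for name, (_, work) in tasks.items()}
    received = {name: 0 for name in tasks}
    for _ in range(ticks):
        ready = [name for name in tasks if remaining[name] > 0]
        if not ready:
            break
        # max() returns the first maximal item, so equal priorities
        # are served in order with no time slicing.
        chosen = max(ready, key=lambda name: tasks[name][0])
        received[chosen] += 1
        remaining[chosen] -= 1
    return received


# Same priority: the buggy task takes all 100 ticks, the healthy one gets none.
same = run_strict_priority({"buggy": (10, SPIN), "healthy": (10, 5)}, 100)

# Raising the healthy task's priority helps in this one case...
raised = run_strict_priority({"buggy": (10, SPIN), "healthy": (11, 5)}, 100)

# ...but if the bug is in the higher-priority task, the other task starves.
moved = run_strict_priority({"low": (10, 5), "high_buggy": (11, SPIN)}, 100)

print(same, raised, moved)
```

Whichever way the priorities are arranged, one looping task at the top of the order is enough to take every tick, which is why priorities alone cannot provide the guarantee.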

With adaptive partitioning, the designer could, for example, allocate 60 percent of the CPU to one process and 40 percent to another. So, even if both processes run at the highest priority and both are busy, the OS will ensure that the first process receives 60 percent of the CPU and the second 40 percent, exactly as specified. Because this approach is “adaptive,” it can hand out spare CPU time to partitions that could benefit from it. For example, if the first partition isn’t using any CPU, and the second partition needs to perform a lot of work, the OS could let the second partition use more than its 40 percent share, so long as it doesn’t impact the operation of the first partition. The instant the first partition needs CPU time, the OS guarantees that it shall have 60 percent of the CPU. This approach effectively provides freedom from interference on the CPU front, in a way that’s both flexible and predictable.
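The 60/40 behavior described above can be illustrated with a short simulation. This is a deliberately simplified sketch: it uses a fixed 100-tick accounting window that resets at each boundary, which is an assumption of this example and not a description of how any particular OS implements adaptive partitioning. The function `run_partitions` and the partition names `first` and `second` are hypothetical.

```python
def run_partitions(budgets, is_ready, ticks, window=100):
    """Simulate budgeted partitions that may borrow spare CPU time.

    budgets maps a partition name to its guaranteed percent of the CPU.
    is_ready(name, tick) says whether the partition has work at that tick.
    Returns a list holding the partition that ran at each tick (None if idle).
    """
    quota = {name: pct * window // 100 for name, pct in budgets.items()}
    used = {}
    timeline = []
    for tick in range(ticks):
        if tick % window == 0:
            used = {name: 0 for name in budgets}  # start a new accounting window
        ready = [name for name in budgets if is_ready(name, tick)]
        in_budget = [name for name in ready if used[name] < quota[name]]
        if in_budget:
            # Guaranteed time: favor the partition furthest below its budget.
            chosen = max(in_budget, key=lambda name: quota[name] - used[name])
        elif ready:
            # Spare time: no partition with budget left wants the CPU, so lend it.
            chosen = ready[0]
        else:
            chosen = None
        if chosen is not None:
            used[chosen] += 1
        timeline.append(chosen)
    return timeline


budgets = {"first": 60, "second": 40}

# Both partitions always busy: the split is exactly 60/40.
both_busy = run_partitions(budgets, lambda name, tick: True, ticks=100)

# The first partition is idle: the second may use the whole CPU.
first_idle = run_partitions(budgets, lambda name, tick: name == "second", ticks=100)

# The first partition wakes at tick 50 and is served at once.
late_wakeup = run_partitions(
    budgets, lambda name, tick: name == "second" or tick >= 50, ticks=200)

print(both_busy.count("first"), both_busy.count("second"))
print(first_idle.count("second"))
print(late_wakeup[50], late_wakeup[100:].count("first"))
```

In the third scenario the second partition has already run past its budget using spare time, so when the first partition becomes ready it takes the very next tick, and in the following full window it again receives its 60 ticks.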

Advanced operating systems provide fine-grained privilege mapping. Every distinct function provided by the kernel, such as setting the time, attaching to an interrupt, or creating a process, is assigned a privilege level. The OS kernel then limits each process to the absolute minimum privileges required. Processes can be started with “extra” privileges (in order to, for example, set up hardware mappings and attach to interrupts), which they can then drop once they reach steady-state operation. The concept of “running as root” is no longer relevant or acceptable.
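The start-with-extra-privileges-then-drop pattern can be sketched as follows. This is a toy model only: the `Process` class, the `kernel_call` function, and the ability names (`map_hardware`, `attach_interrupt`, `set_time`) are invented for illustration and do not correspond to any real kernel API.

```python
class Process:
    """Toy model of a process holding a set of fine-grained abilities."""

    def __init__(self, name, abilities):
        self.name = name
        self.abilities = set(abilities)

    def drop(self, *abilities):
        """Give up abilities; this model offers no way to regain them."""
        self.abilities -= set(abilities)


def kernel_call(process, ability):
    """Stand-in for a kernel entry point that checks the caller's abilities."""
    if ability not in process.abilities:
        raise PermissionError(f"{process.name} lacks {ability}")
    return f"{ability} ok"


# The driver starts with only the abilities it needs for setup.
driver = Process("camera_driver", {"map_hardware", "attach_interrupt"})
setup = [kernel_call(driver, "map_hardware"),
         kernel_call(driver, "attach_interrupt")]

# Once in steady state, it drops them.
driver.drop("map_hardware", "attach_interrupt")

try:
    kernel_call(driver, "attach_interrupt")
    dropped = False
except PermissionError:
    dropped = True

print(setup, dropped)
```

The driver was never granted `set_time` in the first place, and after the drop it cannot repeat even its own setup calls, so a later compromise of the driver gains an attacker very little.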

Hypervisor

An ISO 26262-certified system can also use a hypervisor. As mentioned, adaptive partitioning allows us to run several processes on the same CPU and to make strong guarantees about their interactions with each other. A hypervisor allows us to benefit from two additional configuration options. In one case, we might wish to combine a certified system with a non-certified system, for example, the rear-view camera and the multimedia player. The multimedia player might be subject to more frequent updates and, since it isn’t a critical system, doesn’t need to be certified. A hypervisor can create a clear separation between the certified and non-certified domains in a simple and cost-effective manner.

The hypervisor could also allow the multimedia player to run on a non-certified commodity OS, such as Linux, while allowing the safety-certified critical software to run on a real-time OS, such as QNX Neutrino. Again, the hypervisor provides an effective barrier between the certified part and the non-certified part. The benefits of using a hypervisor include hardware consolidation (one CPU, one LCD panel) and lower certification costs, resulting in a lower overall system cost while retaining safety certification.

QNX Software Systems

www.qnx.com

@QNX_News

LinkedIn: www.linkedin.com/company/qnx-software-systems